Friedrich Hayek called the hubristic belief of social scientists that they could explain and plan the details of society (including economic production) their "fatal conceit." In "The Use of Knowledge in Society" he informally analyzed the division of knowledge to explain why the wide variety of businesses in our economy cannot be centrally planned. "The peculiar character of the problem of a rational economic order is determined precisely by the fact that the knowledge of the circumstances of which we must make use never exists in concentrated or integrated form but solely as the dispersed bits of incomplete and frequently contradictory knowledge which all the separate individuals possess." The economic problem is "a problem of the utilization of knowledge which is not given to anyone in its totality." Austrian economists like Hayek usually eschewed the use of traditional mathematics to describe the economy because such use assumes that economic complexities can be reduced to a small number of axioms.

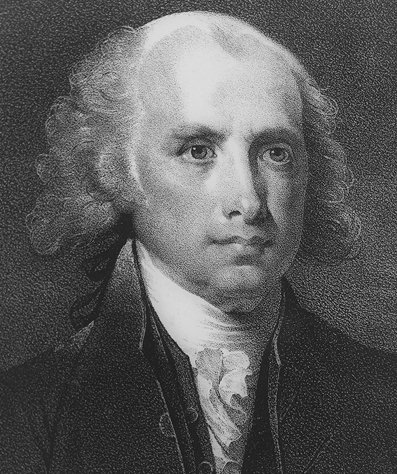

Friedrich Hayek, the Austrian economist and philosopher who discussed the use of knowledge in society.

Modern mathematics, however -- in particular algorithmic information theory -- clarifies the limits of mathematical reasoning, including models with infinite numbers of axioms. The mathematics of irreducible complexity can be used to formalize the Austrians' insights.

Sometimes information comes in simple forms. The number 1, for example, is a simple piece of data. The number pi, although it has an infinite number of digits, is similarly simple, because it can be generated by a short finite algorithm (or computer program). That algorithm fully describes pi. However, a large random number has irreducible complexity: the shortest program that outputs it is about as long as the number itself. Gregory Chaitin discovered a number, Chaitin's omega, which, although it has a simple and clear definition (it is the probability that a randomly generated computer program will halt), has irreducibly infinite complexity. Chaitin proved that no finite computer program can generate all of omega's digits, and that a set of axioms can determine only about as many bits of omega as the axioms themselves contain. He has thus shown that there is no way to reduce mathematics to a finite set of axioms. Any mathematical system based on a finite set of axioms (e.g. the system of simple algebra and calculus commonly used by non-Austrian economists) oversimplifies mathematical reality, and social reality even more so.
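The contrast between pi and a random number can be made concrete. The following is a minimal Python sketch, assuming Jeremy Gibbons' unbounded spigot algorithm as one example of a short program that produces pi's digits indefinitely; the names `pi_digits` and `compressed_ratio` are hypothetical, and zlib compression stands in only as a crude, computable upper bound on information content (true algorithmic complexity is uncomputable). A general-purpose compressor finds no structure in pi's digits and shrinks them about as little as it shrinks random digits, even though the few-line generator describes every digit of pi.

```python
import itertools
import random
import zlib


def pi_digits():
    """Yield the decimal digits of pi forever (Gibbons' unbounded spigot)."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            yield n  # n is now a confirmed digit of pi
            q, r, t, k, n, l = (10 * q, 10 * (r - n * t), t, k,
                                (10 * (3 * q + r)) // t - 10 * n, l)
        else:
            # Absorb another term of the series to narrow the estimate.
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)


def compressed_ratio(data: bytes) -> float:
    """Compressed size / raw size: a crude, computable upper bound on complexity."""
    return len(zlib.compress(data, 9)) / len(data)


N = 2000  # number of decimal digits to compare
pi_text = "".join(str(d) for d in itertools.islice(pi_digits(), N)).encode()

rng = random.Random(42)  # seeded so the run is reproducible
random_text = "".join(rng.choice("0123456789") for _ in range(N)).encode()

print("pi digits:   ", pi_text[:12].decode(), "...")
print("pi ratio:    ", round(compressed_ratio(pi_text), 3))
print("random ratio:", round(compressed_ratio(random_text), 3))
```

Both ratios should come out similar, somewhat above the roughly 0.415 (log2(10)/8 bits per ASCII digit) that an ideal compressor could reach on truly random decimal digits. The compressor's verdict is therefore a poor guide to pi's actual complexity, which is bounded by the length of the generator plus the few digits needed to write down N.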

Furthermore, we know that the physical world contains vast amounts of irreducible complexity. The quantum mechanics of chemistry, the Brownian motions of the atmosphere, and so on create vast amounts of uncertainty and locally unique conditions. Medicine, for example, is filled with locally unique conditions often known only very incompletely by one or a few hyperspecialized physicians or scientists.

Ray Solomonoff and Gregory Chaitin, pioneers of algorithmic information theory.

The strategic nature of the social world means that it would contain irreducible complexity even if the physical world of production and the physical needs of consumption were simple. We can make life open-endedly complicated for each other by playing games like matching pennies, in which each player does best by being unpredictable. Furthermore, shared information might be false or deceptively incomplete.
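Matching pennies is a standard zero-sum game: one player (the matcher) wins a point if two coins show the same face, and the other (the mismatcher) wins if they differ. The Python sketch below, with hypothetical function names, computes how much the matcher can gain against a mismatcher who favors one face. Only a fair 50/50 coin flip leaves nothing to exploit, so strategic play pushes each side toward deliberate unpredictability, which is one simple way such games manufacture irreducible complexity.

```python
from fractions import Fraction


def matcher_payoff(p_heads: Fraction, q_heads: Fraction) -> Fraction:
    """Expected payoff to the matcher (+1 on a match, -1 on a mismatch).

    p_heads: probability the matcher shows heads.
    q_heads: probability the mismatcher shows heads.
    The game is zero-sum, so the mismatcher's payoff is the negation.
    """
    p_match = p_heads * q_heads + (1 - p_heads) * (1 - q_heads)
    return p_match - (1 - p_match)


def best_exploit(q_heads: Fraction) -> Fraction:
    """Matcher's best expected payoff against a mismatcher playing heads with prob q_heads."""
    # Against a fixed mixed strategy, some pure strategy is always a best response.
    return max(matcher_payoff(Fraction(1), q_heads),
               matcher_payoff(Fraction(0), q_heads))


for q in (Fraction(1, 2), Fraction(3, 5), Fraction(9, 10), Fraction(1)):
    print(f"mismatcher plays heads with p={q}: matcher can win {best_exploit(q)} per round")
```

Against a bias q, the matcher's edge works out to |2q - 1| per round, which vanishes only at q = 1/2. Exact `Fraction` arithmetic is used so the zero at the fair coin is exact rather than a floating-point approximation.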

Even if we were perfectly honest and altruistic with each other, we would still face economies of knowledge. A world of more diverse knowledge is far more valuable to us than a world where we all had the same skills and beliefs. This is the most important source of the irreducible complexity of knowledge: the wealthier we are, the greater the irreducibly complex amount of knowledge (i.e. diversity of knowledge) society has about the world and about itself. This entails more diversity of knowledge across different minds, and thus greater difficulty in coordinating economic behavior.

The amount of useful knowledge in the world is far greater than our ability to store, organize, and communicate that knowledge. One limitation is simply how much our brains can hold. There is far more irreducible and important complexity in the world than can be held in a single brain. For this reason, at least some of this complexity is impossible to share between human minds.

The channel capacities of human language and visual comprehension impose further limits. These often make it impossible to share irreducibly complex knowledge between human minds even if the receiving mind could in theory store and comprehend that knowledge. The main barrier here is the inability to articulate tacit knowledge, rather than limitations of technology. However, the strategic and physical limits to reducing knowledge are of such vast proportions that most knowledge could not be fully shared even with ideal information technology. Indeed, economies of knowledge suggest that an even smaller proportion of knowledge would be widely shared in a very wealthy world of physically optimal computer and network technology than is shared today -- although the absolute amount of knowledge shared would be far greater, the sum total of knowledge would be far greater still, and thus the proportion optimally shared would be smaller.
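The proportion argument reduces to a line of arithmetic. Write S for the knowledge that is widely shared and K for the total stock of knowledge, and suppose, purely as an illustrative assumption, that as society grows wealthier S grows by a factor a while K grows by a larger factor b:

$$\frac{S_{\text{rich}}}{K_{\text{rich}}} = \frac{a\,S_{\text{now}}}{b\,K_{\text{now}}} = \frac{a}{b}\cdot\frac{S_{\text{now}}}{K_{\text{now}}} < \frac{S_{\text{now}}}{K_{\text{now}}} \quad \text{whenever } b > a > 0.$$

With hypothetical factors a = 10 and b = 100, ten times as much knowledge would be shared, yet the shared fraction would fall to one tenth of its current value.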

The limitations on the distribution of knowledge, combined with the inexhaustible sources of irreducible complexity, mean that the wealthier we get, the more unique knowledge is stored in each mind and shared with few others, and the smaller the fraction of knowledge available to any one mind. There is a far greater variety of knowledge "pigeons" that must be stuffed into the same brain "pigeonholes," and thus less room for "cloning pigeons" through mass media, mass education, and the like. Wealthier societies take greater advantage of long tails (i.e., they satisfy a greater variety of preferences) and thus become even less plannable than poorer societies that are still focused on simpler areas such as agriculture and Newtonian industry. More advanced societies increasingly focus on areas such as interpersonal behaviors (sales, law, etc.) and medicine (the complexity of the genetic code is just the tip of the iceberg; the real challenge is the irreducibly complex quantum effects of biochemistry,