Using materials native to space, instead of hauling everything from Earth, is crucial to future efforts at large-scale space industrialization and colonization. By then we will be using technologies far in advance of today's, but even now we can see the relevant technology developing for use here on Earth.

There is a myriad of materials we would like to process, including dirty organic-laden ice on comets and some asteroids, subsurface ice and the atmosphere of Mars, and platinum-rich unoxidized nickel-iron metal regoliths on asteroids. There is an even wider array of materials we would like to make. The first and most important is propellant, but eventually we will want a wide array of manufacturing and construction inputs, including complex polymers like Kevlar and graphite-epoxy composites for strong tethers.

The advantages of native propellant can be seen in two kinds of recent mission proposals. In several Mars mission proposals [1], H2 from Earth or from Martian water is chemically processed with CO2 from the Martian atmosphere, making CH4 and O2 propellants for operations on Mars and for the return trip to Earth. Even when the H2 is brought from Earth, this scheme can reduce the propellant mass to be launched from Earth by over 75%. Similarly, I have described a system that converts cometary or asteroidal ice into a cylindrical, zero-tank-mass thermal rocket. This can be used to transport large interplanetary payloads, including the valuable organic and volatile ices themselves, into high Earth and Martian orbits.
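The propellant chemistry behind these Mars proposals can be checked with a simple mass balance. This is a minimal sketch under stated assumptions: it uses the standard Sabatier reaction plus electrolysis of the product water with the hydrogen recycled, assumes ideal 100% conversion, and ignores the mass of the plant and of any CO2-handling equipment. The function name `propellant_from_hydrogen` is illustrative only.

```python
# Idealized mass balance for making CH4/O2 propellant on Mars from
# imported H2 and atmospheric CO2 (assumes 100% conversion, no losses).
#
#   Sabatier:     CO2 + 4 H2 -> CH4 + 2 H2O
#   Electrolysis: 2 H2O      -> 2 H2 + O2   (H2 recycled to Sabatier)
#   Net:          CO2 + 2 H2 -> CH4 + O2

# Standard molar masses in g/mol.
M_H2 = 2.016
M_CO2 = 44.009
M_CH4 = 16.043
M_O2 = 31.998


def propellant_from_hydrogen(h2_kg: float) -> dict:
    """Return kg of CO2 consumed and CH4/O2 produced per h2_kg of imported H2."""
    mol_net = (h2_kg * 1000.0 / M_H2) / 2.0  # the net reaction uses 2 mol H2
    return {
        "co2_kg": mol_net * M_CO2 / 1000.0,
        "ch4_kg": mol_net * M_CH4 / 1000.0,
        "o2_kg": mol_net * M_O2 / 1000.0,
    }


if __name__ == "__main__":
    out = propellant_from_hydrogen(1.0)
    total = out["ch4_kg"] + out["o2_kg"]
    print(f"CH4 {out['ch4_kg']:.2f} kg, O2 {out['o2_kg']:.2f} kg, total {total:.2f} kg")
    # Fraction of Earth-launched propellant mass avoided, ignoring plant mass.
    print(f"ideal launch-mass reduction: {1 - 1.0 / total:.0%}")
```

For 1 kg of imported H2 this ideal case yields roughly 4 kg of CH4 and 8 kg of O2, about 12 kg of propellant. Losses, plant mass and engine mixture-ratio requirements all eat into that ideal, so a more modest figure like "over 75%" is plausible once they are counted.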

Earthside chemical plants are usually far too heavy to launch on rockets into deep space. An important benchmark for plants in space is the ratio of throughput mass to equipment mass, or mass throughput ratio (MTR). At first glance, it would seem that almost any system with MTR>1 would be worthwhile, but in real projects risk must be reduced through redundancy, the time cost of money must be accounted for, equipment launched from Earth must be affordable in the first place (typically <$5 billion) and must be amortized, and propellant burned must be accounted for. For deep-space missions, system MTRs typically need to be in the 100-10,000 per year range to be economical.

A special consideration is the operation of chemical reactors in microgravity. So far all chemical reactors used in space -- mostly rocket engines, and various kinds of life support equipment in space stations -- have been designed for microgravity. However, Earthside chemical plants incorporate many processes that use gravity, and these must be redesigned. Microgravity may be advantageous for some kinds of reactions; this is an active area of research. On moons or other planets, we are confronted with various fixed low levels of gravity that may be difficult to design for. With a spinning tethered satellite in free space, we can get the best of all worlds: microgravity, Earth gravity, or even hypergravity where desired.
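Both quantities in these paragraphs reduce to one-line formulas: MTR is throughput mass per year divided by equipment mass, and a spinning tether's artificial gravity follows from centripetal acceleration, a = ω²r. The sketch below assumes a hypothetical 10 t plant processing 2,000 t per year and a hypothetical 1 km spin radius, measured from the spin axis (the tethered system's center of mass); the function names are illustrative.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def mass_throughput_ratio(throughput_kg_per_year: float, equipment_kg: float) -> float:
    """MTR per year: mass processed per year divided by plant mass."""
    return throughput_kg_per_year / equipment_kg


def spin_for_gravity(radius_m: float, g_fraction: float) -> float:
    """Rotation rate (rpm) giving g_fraction * 1 g at radius_m from the spin axis.

    Centripetal acceleration a = omega^2 * r, so omega = sqrt(a / r).
    """
    omega = math.sqrt(g_fraction * STANDARD_GRAVITY / radius_m)  # rad/s
    return omega * 60.0 / (2.0 * math.pi)


if __name__ == "__main__":
    # Hypothetical plant: 10 t of equipment processing 2,000 t of ice per year.
    mtr = mass_throughput_ratio(2_000_000, 10_000)
    print(f"MTR = {mtr:.0f} per year; in the 100-10,000 band: {100 <= mtr <= 10_000}")
    # Hypothetical 1 km radius: spin rates for lunar gravity, 1 g and 3 g.
    for g in (0.165, 1.0, 3.0):
        print(f"{g:>5} g at 1 km: {spin_for_gravity(1000.0, g):.2f} rpm")
```

A 1 km radius needs only about one revolution per minute for Earth gravity, which is one reason long tethers are attractive for this role.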

A bigger challenge is developing chemical reactors that are small enough to launch on rockets, have high enough throughput to be affordable, and are flexible enough to produce the wide variety of products needed for space industry. A long-range ideal strategy is K. Eric Drexler's nanotechnology [2]. In this scenario small "techno-ribosomes", designed and built molecule by molecule, would use organic material in space to reproduce themselves and produce useful products. An intermediate technology, under experimental research today, uses lithography techniques on the nanometer scale to produce designer catalysts and microreactors. Lithography, the technique which has made possible the rapid improvement in computers since 1970, has moved into the deep submicron scale in the laboratory, and will soon be moving there commercially. Lab research is also applying lithography to the chemical industry, where it might enable breakthroughs to rival those it produced in electronics.

Tim May has described nanolithography that uses linear arrays of 10,000 to 100,000 atomic force microscopes (AFMs) that would scan a chip and fill in detail to 10 nm resolution or better. Elsewhere I have described a class of self-organizing molecules called _nanoresists_, which make possible the use of e-beams down to the 1 nm scale. Nanoresists range from ablatable films, to polymers, to biological structures. A wide variety of other nanolithography techniques are described in [4,5,6]. Small-scale lithography not only improves the feature density of existing devices, it also makes possible a wide variety of new devices that take advantage of quantum effects: glowing nanopore silicon, quantum dots ("designer atoms" with programmable electronic and optical properties), tunneling magnets, squeezed lasers, etc.

Most important for our purposes, it makes possible the mass production of tiny chemical reactors and designer catalysts. Lithography has been used to fabricate a series of catalytic towers on a chip [3]. The towers consist of alternating layers of SiO2 4.1 nm thick and Ni 2-10 nm thick. The deposition process achieves nearly one-atom thickness control for both SiO2 and Ni. Previously it was thought that positioning in three dimensions was required for good catalysis, but this catalyst's nanoscale one-dimensional surface forces reagents into the proper binding pattern. It achieved six times the reaction rate of traditional cluster catalysts on the hydrogenolysis of ethane to methane, C2H6 + H2 --> 2CH4. The thickness of the nickel and silicon dioxide layers can be varied to match the size of the molecules to be reacted.
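Reaction equations like the ethane hydrogenolysis above can be sanity-checked by counting atoms on each side. This is a minimal sketch that assumes simple formulas only (no parentheses, charges or hydrates); the function names `count_atoms` and `is_balanced` are illustrative, and the `-->` arrow follows the notation used in the text.

```python
import re
from collections import Counter

# Element symbol followed by an optional count, e.g. "C2", "H6", "O".
ATOM = re.compile(r"([A-Z][a-z]?)(\d*)")
# Optional leading stoichiometric coefficient, e.g. the "2" in "2CH4".
TERM = re.compile(r"^\s*(\d*)\s*([A-Za-z0-9]+)\s*$")


def count_atoms(side: str) -> Counter:
    """Count atoms on one side of a reaction such as 'C2H6 + H2'."""
    total = Counter()
    for term in side.split("+"):
        match = TERM.match(term)
        if match is None:
            raise ValueError(f"cannot parse term {term!r}")
        coeff = int(match.group(1) or 1)
        for element, n in ATOM.findall(match.group(2)):
            total[element] += coeff * int(n or 1)
    return total


def is_balanced(reaction: str) -> bool:
    """True if both sides of 'lhs --> rhs' contain the same atoms."""
    lhs, rhs = reaction.split("-->")
    return count_atoms(lhs) == count_atoms(rhs)


if __name__ == "__main__":
    print(is_balanced("C2H6 + H2 --> 2CH4"))  # True
    print(is_balanced("C2H6 + H2 --> CH4"))   # False
```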

Catalysts need to have structures precisely designed to trap certain kinds of molecules, let others flow through, and keep still others out, all without getting clogged or poisoned. Currently these catalysts are built by growing crystals of the right spacing in bulk. Sometimes catalysts come from biotech, for example the bacterial enzymes used to make the corn syrup in soda pop. Within this millennium (only 7.1 years left!) we will start to see catalysts built by new techniques of nanolithography, including AFM machining, AFM arrays and nanoresists. Catalysts are critical to the oil industry, the chemical industry and pollution control -- the worldwide market is in the $100's of billions per year and growing rapidly.

There is also a big market for micron-size chemical reactors. We may one day see the flexible chemical plant, with hundreds of nanoscale reactors on a chip and the channels between them reprogrammed via switchable valves, much as the circuits on a chip can be reprogrammed via transistors. Even a more modest, larger-scale version of such a plant could have a wide variety of uses.
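The "flexible chemical plant" treats reactors as nodes and valved channels as programmable links, much like a reconfigurable circuit. The sketch below is purely hypothetical: the `ReactorChip` class and its methods are illustrative names, and real microfluidic routing involves pressures, flow rates and mixing that it ignores. It only shows how switching valves changes which reactors a feed stream can reach.

```python
from collections import deque


class ReactorChip:
    """Hypothetical chip: reactors are nodes, valved channels are directed edges."""

    def __init__(self) -> None:
        # (upstream, downstream) -> is the valve open?
        self.valves: dict[tuple[str, str], bool] = {}

    def add_channel(self, src: str, dst: str) -> None:
        self.valves[(src, dst)] = False  # channels start with the valve closed

    def set_valve(self, src: str, dst: str, is_open: bool) -> None:
        if (src, dst) not in self.valves:
            raise KeyError(f"no channel from {src} to {dst}")
        self.valves[(src, dst)] = is_open

    def reachable(self, start: str) -> set[str]:
        """Nodes a feed injected at `start` can reach through open valves (BFS)."""
        seen, queue = {start}, deque([start])
        while queue:
            node = queue.popleft()
            for (src, dst), is_open in self.valves.items():
                if is_open and src == node and dst not in seen:
                    seen.add(dst)
                    queue.append(dst)
        return seen


if __name__ == "__main__":
    chip = ReactorChip()
    for src, dst in [("feed", "R1"), ("R1", "R2"), ("R1", "R3"), ("R2", "out"), ("R3", "out")]:
        chip.add_channel(src, dst)
    # "Program" 1: route the feed through reactor R2.
    for src, dst in [("feed", "R1"), ("R1", "R2"), ("R2", "out")]:
        chip.set_valve(src, dst, True)
    print(sorted(chip.reachable("feed")))  # ['R1', 'R2', 'feed', 'out']
    # Reprogram: switch valves so the feed goes through R3 instead.
    chip.set_valve("R1", "R2", False)
    chip.set_valve("R1", "R3", True)
    chip.set_valve("R3", "out", True)
    print(sorted(chip.reachable("feed")))  # ['R1', 'R3', 'feed', 'out']
```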

Their first use may be in artificial organs to produce various biological molecules. For example, they might replace or augment the functionality of the kidneys, pancreas, liver, thyroid gland, etc. They might produce psychoactive chemicals inside the blood-brain barrier, for example dopamine to reverse Parkinson's disease. Biological and mechanical chemical reactors might work together, the first produced via metabolic engineering [7], the second via nanolithography.

After microreactors, metabolic engineering, and nanoscale catalysts have been developed for use on Earth, they will spin off for use in space. Microplants in space could manufacture propellant and a wide variety of industrial inputs, and perform life support functions more efficiently. Over 95% of the mass we now launch into space could be replaced by materials produced from comets, asteroids, Mars, etc. Even if Drexler's self-replicating assemblers are a long time in coming, tiny nanolithographed chemical reactors could open up the solar system.

====================

ref:

[1] _Case for Mars_ conference proceedings; Zubrin et al., papers on "Mars Direct".

[2] K. Eric Drexler, _Nanosystems_, John Wiley & Sons, 1992.

[3] _Science_, 20 Nov. 1992, p. 1337.

[4] Ferry et al., eds., _Granular Nanoelectronics_, Plenum Press, 1991.

[5] Geis & Angus, "Diamond Film Semiconductors", _Scientific American_, Oct. 1992.

[6] ???, "Quantum Dots", _Scientific American_, Jan. 1993.

[7] _Science_, 21 June 1991, pp. 1668, 1675.

Sunday, January 21, 2007

Chemical microreactors

Here's a bit of theoretical applied science I wrote back in 1993:

Tuesday, January 09, 2007

Supreme Court says IP licensees can sue before infringing

In today's decision in MedImmune v. Genentech, the U.S. Supreme Court held that (possibly subject to a caveat described below) a patent licensee can sue to get out of the license without having to infringe the patent first. In other words, the licensee can sue without stopping the license payments. This sounds like some minor procedural point. It is a procedural point but it is hardly minor. It dramatically changes the risks of infringement and invalidity, and on whom those risks are placed, in a licensing relationship.

The Court's opinion today reverses the doctrine of the Federal Circuit, the normal appeals court for U.S. patents, that had held that there was no standing for a patent licensee to sue until it had actually infringed the patent or breached the contract. This meant that the licensee had to risk triple damages (for intentional infringement) and other potential problems in order to have a court determine whether the patent was valid or being infringed. This could be harsh, and it has long been argued that such consequences coerce licensees into continuing to pay license fees for products that they have discovered are not really covered by the patent.

Given the fuzziness of the "metes and bounds" of patents, this is a common occurrence. Sometimes the licensee's engineers come up with an alternative design that seems to avoid the patent, but the licensee, fearing triple damages if a court decides otherwise, sticks with the patented product and keeps paying the license fees. At other times new prior art is discovered or other research uncovers the probable invalidity of the patent. But enough uncertainty remains that, given the threat of triple damages, the licensee just keeps paying the license fees.

A caveat is that the licensor may first have to "threaten" the licensee somehow, indicating that it will take action if the licensee doesn't make the expected payments or introduces a new product not covered by the license. In this case that "threat" took the form of an opinion letter from the licensor that the licensee's new product was covered by the old patent, and thus that the licensee had to pay license fees for the new product.

Justice Scalia wrote the opinion for the eight-justice majority. He argued that if the only difference between a justiciable controversy (i.e. a case where the plaintiff has standing under the "case or controversy" clause of the U.S. Constitution) and a non-justiciable one (i.e. no standing to sue) is that the plaintiff chose not to violate the disputed law, then the plaintiff still has standing:

The plaintiff's own action (or inaction) in failing to violate the law eliminates the imminent threat of prosecution, but nonetheless does not eliminate Article III jurisdiction. For example, in Terrace v. Thompson, 263 U. S. 197 (1923), the State threatened the plaintiff with forfeiture of his farm, fines, and penalties if he entered into a lease with an alien in violation of the State's anti-alien land law. Given this genuine threat of enforcement, we did not require, as a prerequisite to testing the validity of the law in a suit for injunction, that the plaintiff bet the farm, so to speak, by taking the violative action.

One of the main purposes of the Declaratory Judgment Act, under which such lawsuits are brought, is to avoid the necessity of committing an illegal act before the case can be brought to court:

Likewise, in Steffel v. Thompson, 415 U. S. 452 (1974), we did not require the plaintiff to proceed to distribute handbills and risk actual prosecution before he could seek a declaratory judgment regarding the constitutionality of a state statute prohibiting such distribution. Id., at 458, 460. As then-Justice Rehnquist put it in his concurrence, "the declaratory judgment procedure is an alternative to pursuit of the arguably illegal activity." Id., at 480. In each of these cases, the plaintiff had eliminated the imminent threat of harm by simply not doing what he claimed the right to do (enter into a lease, or distribute handbills at the shopping center). That did not preclude subject matter jurisdiction because the threat-eliminating behavior was effectively coerced. See Terrace, supra, at 215-216; Steffel, supra, at 459. The dilemma posed by that coercion, putting the challenger to the choice between abandoning his rights or risking prosecution, is "a dilemma that it was the very purpose of the Declaratory Judgment Act to ameliorate." Abbott Laboratories v. Gardner, 387 U. S. 136, 152 (1967).

Scalia, who is normally no fan of easy standing, extended this doctrine from disputes with the government to private disputes. For this he used as precedent Altvater v. Freeman:

The Federal Circuit's Gen-Probe decision [the case in which the Federal Circuit established its doctrine which the Supreme Court today reversed] distinguished Altvater on the ground that it involved the compulsion of an injunction. But Altvater cannot be so readily dismissed. Never mind that the injunction had been privately obtained and was ultimately within the control of the patentees, who could permit its modification. More fundamentally, and contrary to the Federal Circuit's conclusion, Altvater did not say that the coercion dispositive of the case was governmental, but suggested just the opposite. The opinion acknowledged that the licensees had the option of stopping payments in defiance of the injunction, but explained that the consequence of doing so would be to risk "actual [and] treble damages in infringement suits" by the patentees. 319 U. S., at 365. It significantly did not mention the threat of prosecution for contempt, or any other sort of governmental sanction.

Scalia as usual got to the point:

The rule that a plaintiff must destroy a large building, bet the farm, or (as here) risk treble damages and the loss of 80 percent of its business, before seeking a declaration of its actively contested legal rights finds no support in Article III.

Scalia rebutted the argument that under freedom of contract the parties had a right to, and had here, created an "insurance policy" immunizing the licensor from declaratory lawsuits:

Promising to pay royalties on patents that have not been held invalid does not amount to a promise not to seek a holding of their invalidity.

The lessons of this case also apply to copyright and other kinds of IP, albeit in different ways. In copyright, one can be exposed to criminal sanctions for infringement, so there is an even stronger case for declaratory lawsuits.

The most interesting issue is to what extent IP licensors will be able to "contract around" this holding and thus still be able to immunize themselves from pre-infringement lawsuits. It's possible that IP licensors will still be able to prevent their licensees from suing with the proper contractual language. Licensees, on the other hand, may want to insist on language that preserves their rights to sue for declaratory judgments on whether the IP they are licensing is valid, or on whether they are really infringing it with activities for which they wish not to pay license fees. If there's interest, I'll post in the future if I see good ideas for such language, and if I have some good ideas of my own I'll post those. This will be a big topic among IP license lawyers for the foreseeable future, as Scalia's opinion left a raft of issues wide open, including how such language would now be interpreted.

I will later update this post with links to the opinions (I have them via e-mail from Professor Hal Wegner).

UPDATE: Straight from the horse's mouth, here is the slip opinion.

Monday, January 01, 2007

It often pays to wait and learn

Alex Tabarrok writes another of his excellent (but far too infrequent) posts, this time about the problem of global warming and the relative merits of delay versus immediate action.

We already know how to use markets to reduce pollution with minimal cost to industry (and minimal economic impact generally). We now need to learn how to apply these lessons on an international level while avoiding the very real threat of corruption, and of the catastrophic decay of essential industries, that comes from establishing new governmental institutions to radically alter their behavior.

There are tons of theories about politics and economics, practically all of them highly oversimplified nonsense. No single person knows more than a minuscule fraction of the knowledge needed to solve global warming. Political debate over technological solutions will get us nowhere. We won't learn much more about creating incentives to reduce greenhouse gases except by creating them and seeing how they work. As with any social experiment, we should start small and with what we already know works well in analogous contexts -- i.e. what we already know about getting the biggest pollution reductions at the smallest costs.

The costs of the markets -- especially the target auction (and expected exchange) prices of carbon dioxide pollution units -- should thus start out small. In that sense, the European approach under the Kyoto Protocol (the European Union Emission Trading Scheme) provides a good model even though it has been criticized for costing industry almost nothing so far, and correspondingly producing little carbon dioxide reduction so far. So what? We have to learn to crawl before we can learn to walk. The goal, certain fanatic "greens" notwithstanding, is to figure out how to reduce carbon dioxide emissions, not to punish industry or return to pre-industrial economies. Once people and organizations get used to a simple set of incentives, the incentives can be tightened in response to the actual course of global warming, in response to what we learn about global warming, and above all in response to what we learn from our responses to global warming.

We already know that markets (and perhaps also carefully designed carbon taxes, as opposed to micro-regulation and getting the law involved in choosing particular technological solutions) can radically reduce specific pollutants if the specific mitigation decisions are left to market participants rather than dictated by government. And we might learn of certain mitigation strategies (perhaps this one, for example) that turn out to be superior to radical carbon dioxide reductions.
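The claim that leaving mitigation decisions to market participants lowers costs can be illustrated with the textbook two-firm comparison below. It is a minimal sketch, not a model of any actual program: the firms, their linear marginal abatement costs (MC = c * q) and the required total cut are all hypothetical, and the function names are illustrative. A uniform mandate makes every firm cut equally; permit trading tends to push firms toward cuts where their marginal costs are equal, so the cheaper abater does more of the work.

```python
def uniform_mandate_cost(cost_slopes: list[float], total_cut: float) -> float:
    """Total cost when every firm is ordered to make the same cut.

    A firm with marginal abatement cost c * q pays c * q**2 / 2 to cut q units.
    """
    share = total_cut / len(cost_slopes)
    return sum(c * share ** 2 / 2 for c in cost_slopes)


def trading_allocation(cost_slopes: list[float], total_cut: float) -> tuple[float, list[float]]:
    """Permit price and per-firm cuts when marginal costs are equalized.

    Each firm abates until c_i * q_i equals the price p, so q_i = p / c_i,
    and the cuts must sum to total_cut: p = total_cut / sum(1 / c_i).
    """
    price = total_cut / sum(1 / c for c in cost_slopes)
    return price, [price / c for c in cost_slopes]


def trading_cost(cost_slopes: list[float], total_cut: float) -> float:
    """Total abatement cost under the equal-marginal-cost allocation."""
    _, cuts = trading_allocation(cost_slopes, total_cut)
    return sum(c * q ** 2 / 2 for c, q in zip(cost_slopes, cuts))


if __name__ == "__main__":
    slopes, cut = [1.0, 4.0], 100.0  # hypothetical: firm B's cuts cost 4x firm A's
    price, cuts = trading_allocation(slopes, cut)
    print(f"uniform mandate cost: {uniform_mandate_cost(slopes, cut):.0f}")
    print(f"trading: price {price:.0f}, cuts {cuts}, cost {trading_cost(slopes, cut):.0f}")
```

With these numbers the same 100-unit cut costs 6250 under the uniform mandate and 4000 with trading, where firm A cuts 80 units and firm B cuts 20. No regulator needs to know the firms' costs for this to happen; the permit price reveals them.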

Let's set up and debug the basics now -- and nothing is more basic to this problem than international forums, agreements, and exchange(s) that include all countries that will be major sources of greenhouse gases over the next century. These institutions must be designed (and this won't be easy!) for minimal transaction costs -- in particular for minimal rent-seeking and minimal corruption. Until we've set up and debugged such a system, imposing large costs and creating large bureaucracies funded by premature "solutions" to the global warming problem could be far more catastrophic than the projected changes in the weather.

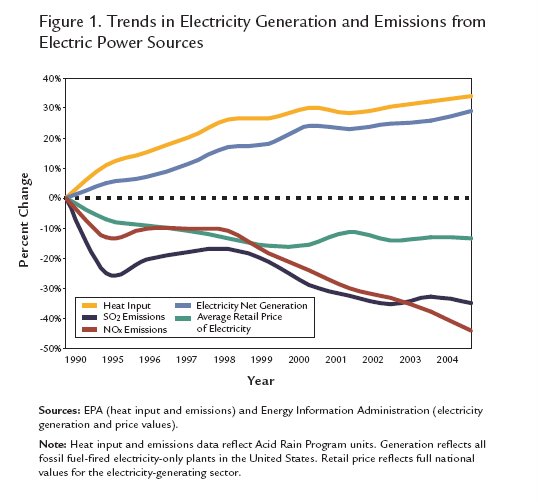

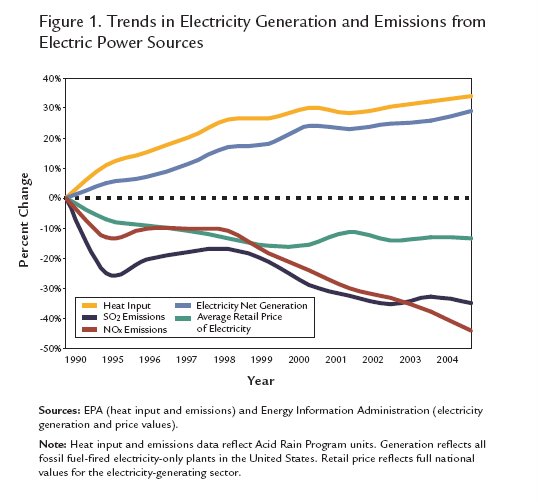

As the accompanying illustrations show, a domestic United States program that left all decisions beyond the most basic and general market rules to the market participants -- and thus left the specific decisions to those with the most knowledge, here the electric utility companies, and minimized the threat of the rise of a corrupt bureaucracy -- was able to radically reduce sulfur dioxide and nitrogen oxides (acid-rain-causing) pollution. So much so that few remember that acid rain in the 1970s and 80s was a scare almost as big as global warming is today. Scientists plausibly argued that our forests were in imminent danger of demise. By experimenting with, learning about, and then exploiting the right institutions, and by methodically working through the initial learning curve, we rapidly mitigated that major pollution threat.

Global warming is an even bigger challenge than acid rain -- especially given its international nature, which demands the participation of all major countries -- but we now have the acid rain experience and others to learn from. We don't have to start from scratch and we don't need to implement a crash program. We can start with what worked quite well in the similar case of acid rain and experiment until we have figured out in reality -- not merely in shallow political rhetoric -- what will work well for the mitigation of global warming.

Furthermore, it probably will pay to not impose the big costs until we've learned far more about the scientific nature of the problems (note the plural) and benefits (yes, there are also benefits, and also plural) of the major greenhouse gases, and until the various industries have learned how to efficiently address the wide variety and vast number of unique problems for industry that the general task of reducing carbon dioxide output raises. It's important to note, however, that the scientific uncertainty, while still substantial, is nevertheless far smaller than the uncertainties of political and economic institutions and their costs.

To put it succinctly -- we should not impose costs faster than industry can adapt to them, and we should not develop international institutions faster than we can debug them: otherwise the "solutions" could be far worse than the disease.

Another application of Tabarrok's theory: "the" space program. (Just the fact that people use "the" to refer to what are, or at least should be, a wide variety of efforts, as in almost any other general area of human endeavor, should give us the first big hint that something is very wrong with "it".) For global warming we may be letting our fears outstrip reality; in "the" space program we have let our hopes outstrip reality. Much of what NASA has done over its nearly fifty-year history, for example, would have been far more effective and self-sustaining if done several decades later, in a very different way, on a smaller scale, on a much lower budget, and for practical reasons, such as commercial or military reasons, rather than as ephemeral political fancies. The best space development strategy is often just to wait and learn -- wait until we've developed better technology and wait until we've learned more about what's available up there. Our children will be able to do it far more effectively than we can. I understand that such waiting is excruciatingly painful to die-hard space fans like myself, but that is all the more reason to beware of deluding ourselves into acting too soon.

In both the global warming and space program activist camps you hear a lot of rot about how "all we need" is "the political will." Utter nonsense. Mostly what we need to do is wait and experiment and learn. When the time is ripe the will is straightforward.

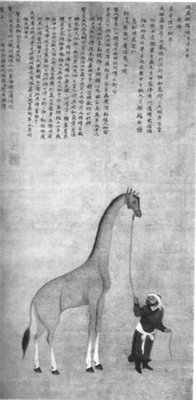

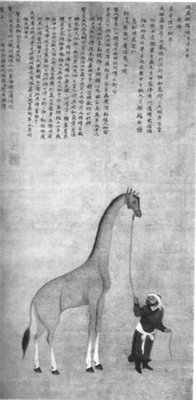

A million-yuan giraffe, brought back to China from East Africa by a Zheng He flotilla in 1414. Like NASA's billion-dollar moon rocks, these were not the most cost-efficient scientific acquisitions, but the Emperor's ships (and NASA's rockets) were bigger and grander than anybody else's! In 1415, just a year after this giraffe entered China, on the other side of the planet the Portuguese, using a far humbler but more practical fleet, took the strategic choke-point of Ceuta from the Muslims. Who would you guess conquered the world's sea trade routes soon thereafter -- tiny Portugal with its tiny ships and practical goals, or vast China with its vast ocean liners engaging in endeavours of glory?

Tabarrok's central argument is this:

Suppose...that we are uncertain about which environment we are in but the uncertainty will resolve over time. In this case, there is a strong argument for delay. The argument comes from option pricing theory applied to real options. A potential decision is like an option, making the decision is like exercising the option. Uncertainty raises the value of any option which means that the more uncertainty the more we should hold on to the option, i.e. not exercise or delay our decision.

I agree wholeheartedly, except to stress that the typical problem is not the binary problem of full delay vs. immediate full action, but one of how much and what sorts of things to do in this decade versus future decades. In that sense, for global warming it seems to me none too early to set up the political agreements and markets we will need to incentivize greenhouse gas reductions. Not to immediately and radically cut emissions of greenhouse gases -- far from it -- but to learn about, experiment with, and debug the institutions we will need to reduce greenhouse emissions without creating even greater political and economic threats. Once these are debugged, but not until then, cutting greenhouse emissions will have a far lower cost than if we panic and soon start naively building large international bureaucracies.
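The option argument can be made concrete with a textbook two-state, two-period example. This is a minimal sketch with made-up numbers (cost, payoffs, probability and discount factor are all hypothetical), not Tabarrok's own model: an irreversible commitment is made either now, or after waiting one period to learn which state holds. Holding the expected payoff fixed and widening the spread between good and bad outcomes raises the value of waiting, as the quote says.

```python
def value_act_now(p_good: float, v_good: float, v_bad: float, cost: float) -> float:
    """Expected value of committing irreversibly before the uncertainty resolves."""
    return p_good * v_good + (1 - p_good) * v_bad - cost


def value_wait(p_good: float, v_good: float, v_bad: float, cost: float,
               discount: float) -> float:
    """Expected value of waiting one period, learning the state, then acting only if it pays.

    max(payoff - cost, 0) is the option: in a bad state we simply decline to act.
    """
    return discount * (p_good * max(v_good - cost, 0.0)
                       + (1 - p_good) * max(v_bad - cost, 0.0))


if __name__ == "__main__":
    # Hypothetical numbers: cost 100, 50/50 odds, 10% penalty for waiting a period.
    # Both scenarios have the same expected payoff (110); only the spread differs.
    for label, v_good, v_bad in [("low uncertainty", 120.0, 100.0),
                                 ("high uncertainty", 200.0, 20.0)]:
        now = value_act_now(0.5, v_good, v_bad, 100.0)
        later = value_wait(0.5, v_good, v_bad, 100.0, 0.9)
        choice = "wait" if later > now else "act now"
        print(f"{label}: act now {now:.1f}, wait {later:.1f} -> {choice}")
```

Acting now is worth 10 in both scenarios, while waiting is worth 9 under low uncertainty and 45 under high uncertainty. Note that the sketch compares only the two extremes, which is exactly the binary framing cautioned against above; a fuller model would let part of the commitment happen now and the rest later.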

We already know how to use markets to reduce pollution with minimal cost to industry (and minimal economic impact generally). We now need to learn how to apply these lessons on and international level while avoiding the very real threat of the corruption and catastrophic decay of essential industries that comes from establishing new governmental institutions to radically alter their behavior.

There are tons of theories about politics and economics, practically all of them highly oversimplified nonsense. No single person knows more than a miniscule fraction of the knowledge needed to solve global warming. Political debate over technological solutions will get us nowhere. We won't learn much more about creating incentives to reduce greenhouse gases except by creating them and seeing how they work. As with any social experiment, we should start small and with what we already know works well in analogous contexts -- i.e. what we already know about getting the biggest pollution reductions at the smallest costs.

The costs of the markets -- especially the target auction (and expected exchange) prices of carbon dioxide pollution units -- should thus start out small. In that sense, the European approach under the Kyoto Protocol (the European Union Emission Trading Scheme ) provides a good model even though it has been criticized for costing industry almost nothing so far, and correspondingly producing little carbon dioxide reduction so far. So what? We have to learn to crawl before we can learn to walk. The goal, certain fanatic "greens" notwithstanding, is to figure out how to reduce carbon dioxide emissions, not to punish industry or return to pre-industrial economies. Once people and organizations get used to a simple set of incentives, they can be tightened in the future in response to the actual course of global warming, in response to what we learn about global warming, and above all in what we learn from our responses to global warming.

We already know markets -- and perhaps also carefully designed carbon taxes, but as opposed to micro-regulation and getting the law involved in choosing particular technological solutions -- markets can radically reduce specific pollutants if they specific mitigation decisions are left to market participants rather than dictated by government. And we might learn certain mitigation strategies (perhaps this one, for example) that turn out to be superior to radical carbon dioxide reductions.

Let's set up and debug the basics now -- and nothing is more basic to this problem than international forums, agreements, and exchange(s) that include all countries that will be major sources of greenhouse gases over the next century. These institutions must be designed (and this won't be easy!) for minimal transaction costs -- in particular for minimal rent-seeking and minimal corruption. Until we've set up and debugged such a system, imposing large costs and creating large bureaucracies funded by premature "solutions" to the global warming problem could be far more catastrophic than the projected changes in the weather.

As the accompanying illustrations show, a domestic United States program that left all decisions beyond the most basic and general market rules to the market participants -- and thus left the specific decisions to those with the most knowledge, here the electric utility companies, and minimized the threat of a corrupt bureaucracy -- was able to radically reduce sulfur dioxide and nitrogen oxide (acid-rain-causing) pollution. So much so that few remember that acid rain in the 1970s and 80s was a scare almost as big as global warming is today. Scientists plausibly argued that our forests were in imminent danger of demise. By experimenting with, learning about, and then exploiting the right institutions, and after methodically working through the initial learning curve, we rapidly mitigated that major pollution threat.

Global warming is an even bigger challenge than acid rain -- especially in its international nature, which demands the participation of all major countries -- but we now have the acid rain experience and others to learn from. We don't have to start from scratch and we don't need to implement a crash program. We can start with what worked quite well in the similar case of acid rain and experiment until we have figured out in reality -- not merely in shallow political rhetoric -- what will work well for the mitigation of global warming.

Furthermore, it probably will pay not to impose the big costs until we've learned far more about the scientific nature of the problems (note the plural) and benefits (yes, there are also benefits, and also plural) of the major greenhouse gases, and until the various industries have learned how to efficiently address the wide variety and vast number of unique problems that the general task of reducing carbon dioxide output raises for industry. It's important to note, however, that the scientific uncertainty, while still substantial, is nevertheless far smaller than the uncertainties of political and economic institutions and their costs.

To put it succinctly -- we should not impose costs faster than industry can adapt to them, and we should not develop international institutions faster than we can debug them: otherwise the "solutions" could be far worse than the disease.

Another application of Tabarrok's theory: "the" space program. (Just the fact that people use "the" to refer to what are, or at least should be, a wide variety of efforts, as in almost any other general area of human endeavor, should give us the first big hint that something is very wrong with "it".) For global warming we may be letting our fears outstrip reality; in "the" space program we have let our hopes outstrip reality. Much of what NASA has done over its nearly fifty-year history, for example, would have been far more effective and self-sustaining if done several decades later, in a very different way, on a smaller scale, on a much lower budget, and for practical reasons, such as commercial or military reasons, rather than as ephemeral political fancies. The best space development strategy is often just to wait and learn -- wait until we've developed better technology and wait until we've learned more about what's available up there. Our children will be able to do it far more effectively than we can. I understand that such waiting is excruciatingly painful to die-hard space fans like myself, but that is all the more reason to beware of deluding ourselves into acting too soon.

In both the global warming and space program activist camps you hear a lot of rot about how "all we need" is "the political will." Utter nonsense. Mostly what we need to do is wait and experiment and learn. When the time is ripe the will is straightforward.

A million-yuan giraffe, brought back to China from East Africa by a Zheng He flotilla in 1414. Like NASA's billion-dollar moon rocks, these were not the most cost-efficient scientific acquisitions, but the Emperor's ships (and NASA's rockets) were bigger and grander than anybody else's! In the year after this giraffe entered China, on the other side of the planet, the Portuguese, using a far humbler but more practical fleet, took the strategic choke-point of Ceuta from the Muslims. Who would you guess conquered the world's sea trade routes soon thereafter -- tiny Portugal with their tiny ships and practical goals, or vast China with their vast ocean liners engaging in endeavors of glory?