
Thursday, February 22, 2007

Partially self-replicating machines being built

Nevertheless, I find these devices quite interesting from a theoretical point of view. The complexity of design needed to make these machines fully self-replicating may give us some idea of how improbable it was for self-replicating life to originate.

In a hypothetical scenario where one lacked ready access to industrial civilization -- after a nuclear war or in a future space industry, for example -- machines like these could be quite useful for bootstrapping industry with a bare minimum of capital equipment. For similar reasons, it's possible that machines like these might prove useful in some developing world areas -- and indeed that seems to be a big goal of these projects. Add in open source code made in the first world but freely available in the third, and this opens up the intriguing possibility of a large catalog of parts that can be freely downloaded and cheaply printed. Also useful for this goal is to reduce the cost of the remaining parts not replicated by the machine -- although even, for example, $100 worth of parts is more than a year's income for many people in the developing world, and the polymer materials that can be most usefully printed are also rather expensive. But ya gotta start somewhere.

RepRap is a project to build a 3D printer that can manufacture all of its expensive parts. Although they call this "self-replicating", it might be better to think of their goal as an open-source digital smithy using plastics instead of iron. To achieve their goal the machine need not produce "widely-available [and inexpensive] bought-in parts (screws, washers, microelectronic chips, and the odd electric motor)." That's quite a shortcut as such parts, despite being cheap in first world terms, require a very widespread and complex infrastructure to manufacture and are rather costly by developing country standards. The machine also does not attempt to assemble itself -- extensive manual assembly is presumed. RepRap makes a practical intermediate goal between today's 3D printers and more complete forms of self-replication that would, for example, include all parts of substantial weight, would self-assemble, or both. The project is associated with some silly economics, but I'm hardly complaining as long as the bad ideas result in such good projects. So far RepRap has succeeded in producing "a complete set of parts for the screw section of the extruder."

Similar to RepRap, but apparently not quite as far along, is Tommelise. Their goal is that a craftsman can build a new Tommelise using an old Tommelise and $100-$150 in parts plus the cost of some extra tools.

A less ambitious project that already works -- an open source fab, but with no goal of making its own parts -- is the Fab@Home project. Using Fab@Home, folks have already printed out stuff in silicone, cake icing, chocolate, and more.

Meanwhile, in an economically attractive (but not self-replicating) application these guys want to print out bespoke bones based on CT or MRI scans.

Tuesday, February 06, 2007

Falsifiable design: a methodology for evaluating theoretical technologies

We lack a good discipline of theoretical technology. As a result, discussion of such technologies among scientists, engineers, and laypeople alike often never gets beyond naive speculation, which ranges from dismissing or being antagonistic to such possibilities altogether to proclaiming that sketchy designs in hard-to-predict areas of technology are easy and/or inevitable and thus worthy of massive attention and R&D funding. At one extreme are recent news stories, supposedly based on the new IPCC report on global warming, that claim that we will not be able to undo the effects of global warming for at least a thousand years. At the other extreme are people like Eric Drexler and Ray Kurzweil who predict runaway technological progress within our lifetimes, allowing us not only to easily and quickly reverse global warming but also to conquer death, colonize space, and much else. Such astronomically divergent views, each held by highly intelligent and widely esteemed scientists and engineers, reflect vast disagreements about the capabilities of future technology. Today we lack good ways for proponents of such views to communicate in a disciplined way with each other or with the rest of us about such claims, and we lack good ways, as individuals, as organizations, and as a society, to evaluate them.

Sometimes descriptions of theoretical technologies lead to popular futuristic movements such as extropianism, transhumanism, cryonics, and many others. These movements make claims, often outlandish but not refuted by scientific laws, that the nature of technologies in the future should influence how we behave today. In some cases this leads to quite dramatic changes in outlook and behavior. For example, people ranging from baseball legend Ted Williams to cryptography pioneer and nanotechnologist Ralph Merkle have committed on the order of a hundred thousand dollars to having their bodies frozen in the hopes that future technologies will be able to fix the freezing damage and cure whatever killed them. Such beliefs can radically alter a person's outlook on life, but how can we rationally evaluate their credibility?

Some scientific fields have developed that inherently involve theoretical technology. For example, SETI (and less obviously its cousin astrobiology) inherently involves speculation about what technologies hypothetical aliens might possess.

Eric Drexler called the study of such theoretical technologies "exploratory engineering" or "theoretical applied science." Currently futurists tend to evaluate such designs based primarily on their mathematical consistency with known physical law. But except in computer science or where only the simplest of physics is involved, mere mathematical consistency with high-level abstractions is woefully insufficient for demonstrating the future workability or desirability of possible technologies. And it falls far short of the criteria engineers and scientists generally use to evaluate each other's work.

Traditional science and engineering methods are ill-equipped to deal with such proposals. As "critic" has pointed out, both demand near-term experimentation or tests to verify or falsify claims about the truth of a theory or whether a technology actually works. Until such an experiment has occurred, a scientific theory is a mere hypothesis, and until a working physical model, or at least a description sufficient to create one, has been produced, a proposed technology is merely theoretical. Scientists tend to ignore theories that they have no prospect of testing and engineers tend to ignore designs that have no imminent prospect of being built, and in the normal practice of these fields this is a quite healthy attitude. But to properly evaluate theoretical technologies we need ways other than near-term working models to reduce the large uncertainties in such proposals and to come to some reasonable estimates of their worthiness and relevance to R&D and other decisions we make today.

The result of these divergent approaches is that when scientists and engineers talk to exploratory engineers they talk past each other. In fact, neither side has been approaching theoretical technology in a way that allows it to be properly evaluated. Here is one of the more productive such conversations -- the typical one is even worse. The theoreticians' claims are too often untestable, and the scientists and engineers too often demand inappropriately that descriptions be provided that would allow one to actually build the devices with today's technology.

To think about how we might evaluate theoretical technologies, we can start by looking at a highly evolved system that technologists have long used to judge the worthiness of new technological ideas -- albeit not without controversy -- the patent system.

The patent system sets up several basic requirements for proving that an invention is worthy enough to become a property right: novelty, non-obviousness, utility, and enablement. (Enablement is also often called "written description" or "sufficiency of disclosure", although sometimes these are treated as distinct requirements that we need not get into for our purposes). Novelty and non-obviousness are used to judge whether a technology would have been invented anyway and are largely irrelevant for our purposes here (which is good news because non-obviousness is the source of most of the controversy about patent law). To be worthy of discussion in the context of, for example, R&D funding decisions, the utility of a theoretical technology, if or when in the future it is made to work, should be much larger than that required for a patent -- the technology if it works should be at least indirectly of substantial expected economic importance. I don't expect meeting this requirement to be much of a problem, as futurists usually don't waste much time on the economically trivial, and it may only be needed to distinguish important theoretical technologies from those merely found in science fiction for the purposes of entertainment.

The patent requirement that presents the biggest problem for theoretical technologies is enablement. The inventor or patent agent must write a description of the technology detailed enough to allow another engineer in the field to, given a perhaps large but not infinite amount of resources, build the invention and make it work. If the design is not mature enough to build -- if, for example, it requires further novel and non-obvious inventions to build it and make it work -- the law deems it of too little importance to entitle the proposer to a property right in it. Implementing the description must not require so much experimentation that it becomes a research project in its own right.

An interesting feature of enablement law is the distinction it makes between fields that are considered more predictable or less so. Software and (macro-)mechanical patents generally fall under the "predictable arts" and require much less detailed description to be enabling. But biology, chemistry, and many other "squishier" areas are much less predictable and thus require far more detailed description to be considered enabling.

Theoretical technology lacks enablement. For example, no astronautical engineer today can take Freeman Dyson's description of a Dyson Sphere and go out and build one, even given a generous budget. No one has been able to take Eric Drexler's Nanosystems and go out and build a molecular assembler. From the point of view of the patent system and of typical engineering practice, these proposals either require far more investment than is practical, or have far too many engineering uncertainties and dependencies on other unavailable technology to build them, or both, and thus are not worth considering.

For almost all scientists and engineers, the lack of imminent testability or enablement puts such ideas out of their scope of attention. For the normal practice of science and engineering this is healthy, but it can have quite unfortunate consequences when we decide what kinds of R&D to fund or how to respond to long-term problems. As a society we must deal with long-term problems such as undesired climate change. To properly deal with such problems we need ways to reason about what technologies may be built to address them over the decades, centuries, or even longer spans over which we expect such problems to unfold. For example, the recent IPCC report on global warming has projected trends centuries into the future, but has not dealt in any serious or knowledgeable way with how future technologies might either exacerbate or ameliorate such trends over such a long span of time -- even though the trends it discusses are primarily caused by technologies in the first place.

Can we relax the enablement requirement to create a new system for evaluating theoretical technologies? We might call the result a "non-proximately enabled invention" or a "theoretical patent." [UPDATE: note that this is not a proposal for an actual legally enforceable long-term patent. This is a thought experiment for the purposes of seeing to what extent patent-like criteria can be used for evaluating theoretical technology]. It retains (and even strengthens) the utility requirement of a patent, but it relaxes the enablement requirement in a disciplined way. Instead of enabling a fellow engineer to build the device, the description must be sufficient to enable a research project -- a series of experiments and tests that would, if successful, lead to a working invention, and if unsuccessful would serve to demonstrate that the technology as proposed probably can't be made to work.

It won't always be possible to test claims about theoretical designs. They may be of such a nature as to require technological breakthroughs even to test them. In many cases this will be due to the theoretical designer's failure to appreciate the requirement that such designs be testable. In some cases the theoretical designer will lack the skill or imagination to come up with such tests. In some cases it will be because the theoretical designer refuses to admit the areas of uncertainty in the design and thus denies the need for experiment to reduce these uncertainties. In some cases it may simply be inherent in the radically advanced nature of the technology being studied that there is no way to test it without making other technological advances. Regardless of why the designs are untestable, such designs cannot be considered anything but entertaining science fiction. Only in the most certain of areas -- mostly just in computer science and in the simplest and most verified of physics -- should mathematically valid but untested claims be deemed credible for the purposes of making important decisions.

Both successful and unsuccessful experiments that reduce uncertainty are valuable. Demonstrating that the technology can work as proposed allows lower-risk investments in it to be made sooner, and leads to decisions better informed about the capabilities of future technology. Demonstrating that it can't work as proposed prevents a large amount of money from being wasted trying to build it and prevents decisions from being made today based on the assumption that it will work in the future.

The distinction made by patent systems between fields that are more versus less predictable becomes even more important for theoretical designs. In computer science the gold standard is mathematical proof rather than experiment, and given such proof no program of experiment need be specified. Where simple physics is involved, such as the orbital mechanics used to plan space flights, the uncertainty is also usually very small and proof based on known physical laws is generally sufficient to show the future feasibility of orbital trajectories. (Whether the engines can be built to achieve such trajectories is another matter). Where simple scaling processes are involved (e.g. scaling up rockets from Redstone sized to Saturn sized) uncertainties are relatively small. Thus, to take our space flight example, the Apollo program had a relatively low uncertainty well ahead of time, but it was unusual in this regard. As soon as we get into dependency on new materials (e.g. the infamous "unobtainium" material stronger than any known materials), new chemical reactions or new ways of performing chemical reactions, and so on, things get murkier far more quickly, and it is essential to good theoretical design that these uncertainties be identified and that experiments to reduce them be designed.

In other words, the designers of a theoretical technology in any but the most predictable of areas should identify those of its assumptions and claims that have not already been tested in a laboratory. They should design not only the technology but also a map of the uncertainties and edge cases in the design and a series of experiments and tests that would progressively reduce these uncertainties. A proposal that lacks this admission of uncertainties, coupled with designs of experiments that will reduce them, should not be deemed credible for the purposes of any important decision. We might call this requirement a requirement for a falsifiable design.
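To make the idea of an uncertainty map a little more concrete, here is a minimal Python sketch. The class and field names, the example claims, and the probabilities are all hypothetical, and combining the probabilities by simple multiplication assumes the uncertainties are independent, which real ones often are not. It shows how an evaluator's subjective probabilities roll up into an overall feasibility estimate, and how running one experiment, by resolving an assumption, changes that estimate.

```python
from dataclasses import dataclass
from math import prod

@dataclass
class Uncertainty:
    """One untested assumption in a theoretical design (hypothetical structure)."""
    claim: str
    p_holds: float   # evaluator's subjective probability that the claim is true
    experiment: str  # proposed test that would confirm or refute the claim

def feasibility(uncertainties):
    """Probability that every assumption holds, ASSUMING they are independent."""
    return prod(u.p_holds for u in uncertainties)

def record_result(u, succeeded):
    """Once its experiment is run, an assumption is confirmed (1.0) or refuted (0.0)."""
    u.p_holds = 1.0 if succeeded else 0.0

# Hypothetical design with made-up probabilities
design = [
    Uncertainty("new material reaches required strength", 0.5, "tensile test of a lab sample"),
    Uncertainty("reaction runs at required rate", 0.7, "bench-scale kinetics experiment"),
    Uncertainty("trajectory obeys known orbital mechanics", 0.99, "numerical simulation"),
]

print(round(feasibility(design), 4))  # 0.3465
record_result(design[0], succeeded=True)
print(round(feasibility(design), 4))  # 0.693
```

Had the tensile test failed instead, the estimate would drop to zero: the outcome in which the design as proposed is shown, at least provisionally, to be unworkable.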

Falsifiable design resembles the systems engineering done with large novel technology programs, especially those that require large amounts of investment. Before the large investments are made, tests are conducted so that the program, if it won't actually work, will "fail early" with minimal loss, or will proceed with components and interfaces that actually work. Theoretical design must work on the same principle, but on longer time scales and with even greater uncertainty. The greater uncertainties involved make it even more imperative that uncertainties be resolved by experiment.

The distinction between a complete blueprint or enabling description and a description for the purposes of enabling falsifiability of a theoretical design is crucial. A patent enablement or an architectural blueprint is a description of how to build. An enablement of falsifiability is a description of the form: if it were built -- and we don't claim to be describing or even to know how to build it -- here is how we believe it would function, here are the main uncertainties about how it would function, and here are the experiments that can be done to reduce these uncertainties.

Good theoretical designers should be able to recognize the uncertainties in their own and others' designs. They should be able to characterize the uncertainties in such a way as to allow evaluators to assign probabilities to them and to decide what experiments or tests can most easily reduce them. It is the recognition of uncertainties and the plan of uncertainty reduction, even more than the mathematics demonstrating the consistency of the design with physical law, that will enable the theoretical technology for the purpose, not of building it today, but of making important decisions today based on the degree to which we can reasonably expect it to be built and produce value in the future.

Science fiction is an art form in which the goal is to suspend the reader's disbelief by artfully avoiding mention of the many uncertainties involved in the wonderful technologies. To be useful and credible -- to achieve truth rather than just the comfort or entertainment of suspension of disbelief -- theoretical design must do the opposite -- it must highlight the uncertainties and emphasize near-term experiments that can be done to reduce them.

Monday, February 05, 2007

Mining the vasty deep (ii)

Beyond Oil

The first installment of this series looked at the most highly developed offshore extraction industry, offshore oil. A wide variety of minerals besides oil are extracted on land. As technology improves, and as commodity prices remain high, more minerals are being extracted from beneath the sea. The first major offshore mineral beyond oil, starting in the mid 1990s, was diamond. More recently, there has been substantial exploration, research, and investment towards the development of seafloor massive sulfide (SMS) deposits, which include gold, copper, and several other valuable metals in high concentrations. Today we look at mining diamonds from the sea.

Diamond

De Beers' mining ship for their first South African marine diamond mine

The first major area after oil was opened up by remotely operated vehicles (ROVs) in the 1990s -- marine diamond mining. The current center of this activity is Namibia, with offshore reserves estimated at more than 1.5 billion carats. The companies mining or planning to mine the Namibian sea floor with ROVs include Namdeb (a partnership between the government and De Beers, and the largest Namibian diamond mining company), Namco (which has been mining an estimated 3 million carats since discovering its subsea field in 1996), Diamond Fields Intl. (which expects to mine 40,000 carats a year from the sea floor), and Afri-Can (another big concession holder, which is currently exploring its concessions and hopes to ramp up to large-scale undersea operations). Afri-Can has been operating a ship and crawler (ROV) that vacuums up 50 tons per hour of gravel from a sea floor 90 to 120 meters below the surface and processes the gravel for the diamonds. They found 7.2 carats of diamond per 100 cubic meters of gravel, which means the field is probably viable and further sampling is in order.
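As a rough worked example of what the Afri-Can sampling figures imply, the sketch below converts 50 tons per hour of gravel into cubic meters and applies the grade of 7.2 carats per 100 cubic meters. The bulk density of the seabed gravel is an assumed value, not a figure from the source, and the tons are treated as metric tonnes; the answer scales inversely with the assumed density.

```python
# Figures from the text (tons treated here as metric tonnes)
throughput_t_per_h = 50.0        # gravel vacuumed per hour
grade_ct_per_m3 = 7.2 / 100.0    # 7.2 carats per 100 cubic meters of gravel

# ASSUMPTION (not from the source): bulk density of loose seabed gravel
bulk_density_t_per_m3 = 2.0

volume_m3_per_h = throughput_t_per_h / bulk_density_t_per_m3
carats_per_h = volume_m3_per_h * grade_ct_per_m3
print(f"{volume_m3_per_h:.1f} m^3/h -> {carats_per_h:.2f} carats/h")  # 25.0 m^3/h -> 1.80 carats/h
```

With the assumed density, the crawler would move about 25 cubic meters of gravel and recover roughly 1.8 carats per hour of operation.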

De Beers is also investing in a diamond mine in the seas off South Africa. A retrofitted ship will be used, featuring a gravel processing plant capable of sorting diamonds from 250 tons of gravel per hour. It is hoped the ship will produce 240,000 carats a year when fully operational.
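The De Beers figures can be turned around to ask what average grade the ship would need to process in order to hit its target. The number of hours per year the plant actually runs is not given in the source, so this sketch tries a few hypothetical values; it also assumes the full 250 tons per hour throughput whenever the plant is running.

```python
TARGET_CT_PER_YEAR = 240_000   # from the text, when fully operational
PLANT_T_PER_H = 250            # from the text, gravel processed per hour

def implied_grade(operating_hours_per_year):
    """Average carats per ton of gravel needed to hit the annual target."""
    tons_per_year = PLANT_T_PER_H * operating_hours_per_year
    return TARGET_CT_PER_YEAR / tons_per_year

# HYPOTHETICAL operating hours per year; 8,760 would mean running nonstop
for hours in (4_000, 6_000, 8_760):
    print(f"{hours} h/yr -> {implied_grade(hours):.2f} carats per ton")
```

Even running nonstop, the target implies roughly a tenth of a carat per ton of gravel; any downtime for weather, maintenance, or relocation raises the grade the deposit must deliver.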

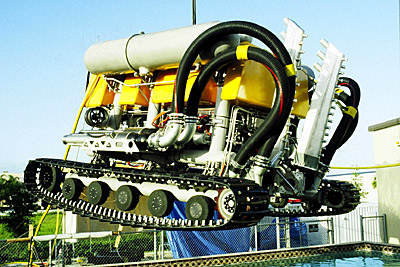

The ship will engage in

horizontal mining, utilising an underwater vehicle mounted on twin Caterpillar tracks and equipped with an anterior suction system...The crawler's suction systems are equipped with water jets to loosen seabed sediments and sorting bars to filter out oversize boulders. The crawler is fitted with highly accurate acoustic seabed navigation and imaging systems. On board the vessel will be a treatment plant consisting of a primary screening and dewatering plant, a comminution mill sector followed by a dense media separation plant and finally a diamond recovery plant.

In other words, a ROV will vacuum diamond-rich gravel off the sea floor, making a gravel slurry which is then piped to the ship, where it is sifted for the diamonds. This is similar to the idea of pumping oil from the sea floor onto a FPSO -- a ship which sits over the wells and processes the oil, separates out the water, stores it, and offloads it onto visiting oil tankers. However, with marine diamond mining, instead of a fixed subsea tree capping and valving a pressurized oil field, a mobile ROV vacuums up a gravel slurry to be pumped through hoses to the ship.

By contrast to this horizontal marine mining, vertical mining "uses a 6 to 7 meter diameter drill head to cut into the seabed and suck up the diamond bearing material from the sea bed."

Coming soon: some new startup companies plan to mine extinct black smokers for copper, gold, and other valuable metals.

Thursday, February 01, 2007

Mining the vasty deep (i)

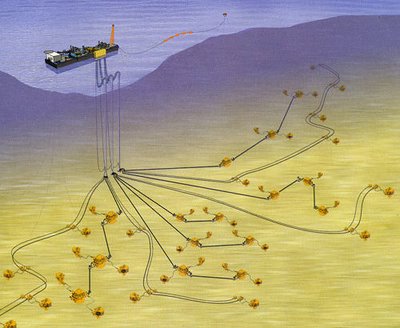

Layout of the Total Girassol field, off the shore of Angola in 1,400 meters of water, showing the FPSO ship, risers, flow lines, and subsea trees (well caps and valves on the sea floor).

Why peak oil is nonsense

There are any number of reasons why peak oil is nonsense, such as tar sands and coal gasification. Perhaps the most overlooked, however, is that up until now oil companies have focused on land and shallow seas, which are relatively easy to explore. But there is no reason to expect that oil, which was largely produced by oceans in the first place (especially by the precipitation of dead plankton), is any more scarce underneath our eon's oceans than it is under our lands. Oceans cover over two-thirds of our planet's surface, and most of that is deep water (defined in this series as ocean floor 1,000 meters or more below the surface). A very large fraction of the oil on our planet remains to be discovered in deep water. Given a reasonable property rights regime enforced by major developed world powers, this (along with the vast tar sands in Canada) means not only copious future oil, but that this oil can mostly come from politically stable areas.

The FPSO, riser towers and flow lines in Total's Girassol field off of Angola.

Some perspective, even some purely theoretical perspective, is in order. If we look at the problem at the scale of the solar system, we find that hydrocarbons are remarkably common -- Titan has clouds and lakes of ethane and methane, for example, and there are trillions of tonnes of hydrocarbons, at least, to be found on comets and in the atmospheres and moons of the gas giant planets. What is far more scarce in the solar system is free oxygen. If there will ever be a "peak" in the inputs to hydrocarbon combustion in the solar system it will be in free oxygen -- which as a natural occurrence is extremely rare beyond Earth's atmosphere, and is rather expensive to make artificially.

A deep sea "robot hand" on a ROV (Remotely Operated Vehicle) more often than not ends in an attachment specific for the job. Here, a subsea hydraulic grinder and a wire brush.

What's even more scarce, however, are habitable planets that keep a proper balance between greenhouse gases and sunlight. Venus had a runaway greenhouse, partly from being closer to the sun and partly because of increased carbon dioxide in its atmosphere, which acts like an insulating blanket, preventing heat from radiating away quickly enough. The result is that Venus' surface temperature is over 400 C (that's over 750 F), hotter than the surface of Mercury. On Mars most of the atmosphere escaped, due to the planet's low gravity, and its water, and eventually even much of its remaining carbon dioxide, froze, again partly due to the greater distance from the sun and partly due to the low level of greenhouse gases in its generally thin atmosphere.

Hydraulic subsea bandsaw. Great for cutting pipes as shown here.

So far, the Earth has been "just right", but the currently rapidly rising amount of carbon dioxide and methane in our atmosphere, largely from the hydrocarbons industries, is moving our planet in the direction of Venus. Nothing as extreme as Venus is in our foreseeable future, but neither will becoming even a little bit more like Venus be very pleasant for most of us. The real barrier to maintaining our hydrocarbon-powered economy is thus not "peak oil", but emissions of carbon dioxide and methane with the resulting global warming. That peak oil is nonsense makes global warming an even more important problem to solve, at least in the long term. We won't avoid it by oil naturally becoming too expensive; instead we must realize that our atmosphere is a scarce resource and make property out of it, as we did with "acid rain."

This series isn't mainly about oil or the atmosphere, however; it is about the technology (and perhaps some of the politics and law) of extracting minerals generally from the deep sea. Oil is the first deep sea mineral to be extracted on a large scale. The rest of this article will look at some of the technology used to recover offshore oil, especially in deep water. Future posts in this series will look at mining other minerals off the ocean floor.

Painting of the deepwater (1,350 meters) subsea trees at the Total Girassol field. They're not really this close together.

FPSOs

Once the wells have been dug, the main piece of surface equipment that remains on the scene, especially in deep water fields where pipelines to the seashore are not effective, is the Floating Production, Storage, and Offloading (FPSO) platform. The FPSO is usually an oil tanker that has been retrofitted with special equipment, which often injects water into wells, pumps the resulting oil from the sea floor, performs some processing on the oil (such as removing seawater and gases that have come out with the oil), stores it, and then offloads it to oil tankers, which ship it to market for refining into gasoline and other products. Far from shore and in deep water, the FPSO substitutes for pipes going directly to shore (the preferred technique for shallow wells close to a politically friendly shoreline).

Many billions of dollars typically are invested in developing a single deep water oil field, with hundreds of millions spent on the FPSO alone. According to Wikipedia, the world's largest FPSO is operated by Exxon Mobil near Total's deep water field off Angola: "The world's largest FPSO is the Kizomba A, with a storage capacity of 2.2 million barrels. Built at a cost of over US$800 million by Hyundai Heavy Industries in Ulsan, Korea, it is operated by Esso Exploration Angola (ExxonMobil). Located in 1200 meters (3,940 ft) of water at Deepwater block 15, 200 statute miles (320 km) offshore in the Atlantic Ocean from Angola, West Africa, it weighs 81,000 tonnes and is 285 meters long, 63 meters wide, and 32 meters high (935 ft by 207 ft by 105 ft)."
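The metric and imperial figures in the quotation can be cross-checked using the exact definitions of the foot (0.3048 m) and the statute mile (1.609344 km). This small sketch shows that the quoted dimensions agree to rounding; the 3,940 ft and 320 km figures appear to be rounded from roughly 3,937 ft and 322 km.

```python
FT_PER_M = 1 / 0.3048    # 1 ft is defined as exactly 0.3048 m
KM_PER_MI = 1.609344     # 1 statute mile is defined as exactly 1.609344 km

def m_to_ft(meters):
    return meters * FT_PER_M

# Kizomba A figures as quoted (meters)
quoted = {"length": 285, "width": 63, "height": 32, "water depth": 1200}
for name, meters in quoted.items():
    print(f"{name}: {meters} m = {m_to_ft(meters):.0f} ft")
print(f"offshore distance: 200 mi = {200 * KM_PER_MI:.0f} km")
```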

ROVs

Today's ROVs (Remotely Operated Vehicles) go far beyond the little treasure-recovery sub you may have seen in "Titanic." There are ROVs for exploration, rescue, and a wide variety of other undersea activities. Most interesting is the wide variety of ROVs used for excavation -- for dredging channels, for trenching, laying, and burying pipe, and for maintaining the growing variety of undersea equipment. Due to ocean-crossing cables and deep sea oil fields, it is now common for ROVs to conduct their work in thousands of meters of water, far beyond the practical range of divers.

A grab excavator ROV.

It should be noted that undersea operations differ from space operations. With space vehicles, teleprogramming via general commands is the norm, often with long time delays between sending the commands and learning the results; undersea, real-time interaction is the norm. Because of operator fatigue and the costs of maintaining workers on offshore platforms, research is being done on fully automating certain undersea tasks, but the current state of the art remains a human closely in the loop. The cost of maintaining workers on platforms is vastly lower than the cost of maintaining an astronaut in space, so the problem of fully automating undersea operations is correspondingly less important. Nevertheless, many important automation problems, such as the simplification of operations, have had to be solved in order to make it possible for ROVs to replace divers at all.

A ROV for digging trenches, used when laying undersea cable or pipe.

Another important consideration is that ROVs depend on their tethers to deliver not only instructions but power. An untethered robot lacks the power to perform many required operations, especially excavation. At sea, as long as the tether is delivering power, it might as well deliver real-time interactive instructions and sensor data too, i.e. support teleoperation.

Trenching and other high-power ROVs are usually referred to as "work class." There are over 400 of them in operation today, collectively worth more than $1.5 billion, and their numbers are increasing rapidly.

Tankers are big, but storms can be bigger.

Harsh Conditions

Besides deep water and the peril of storms anywhere at sea, many offshore fields operate under other kinds of harsh conditions. The White Rose and Sea Rose fields of Newfoundland start by excavating "glory holes" dug down into the sea floor to protect the seafloor equipment against icebergs, which can project all the way down to the fairly shallow sea floor. Inside these holes the oil outflow and fluid injection holes themselves are dug and capped with subsea trees (valves). The drill platform, FPSO, and some of the other equipment have been reinforced to protect against icebergs.

In future installments, I'll look at diamond mining and the startups that plan to mine the oceans for copper, gold, and other minerals.