
Saturday, December 10, 2011

Short takes

--------

Is Netflix management really stupid? To summarize Megan McArdle, no: under copyright law, DVDs are covered by the first sale rule -- once you buy a DVD, you can't make a copy, but you can sell, rent or give away the DVD itself. Streaming, on the other hand, essentially involves making a copy, and you can't do it legally without the copyright owner's permission. So to stream the most demanded content, you usually need the permission of owners, and thus have to pay what they demand.

It thus makes complete sense that Netflix has to charge high prices for streaming -- because for the kind of content they want to stream (i.e. the copyrights that are so valuable that their owners bother to prevent the content from being distributed on YouTube), content owners are demanding revenues similar to what they are accustomed to via cable.

I'd add that the first sale rule also explains why Netflix, contrary to its name, originally succeeded in out-competing a gaggle of Internet video streaming companies by the stone-age method of shipping DVDs by mail. The main thing Netflix may have done wrong was, after succeeding in pivoting from Internet streaming to mailing DVDs, going back to their original goal in a way that set false expectations about prices (i.e. that streaming prices would be closer to DVD rental prices than to cable TV). Probably what happened is that Netflix CEO Reed Hastings thought he could use his mail-order rental business as leverage to negotiate lower prices with copyright owners, but this strategy did not succeed.

It also makes sense that Netflix (and their streaming competitors) lack licensed content due to copyright owners' long-standing aversion to Internet streaming. All this was happening to Netflix's video-streaming competitors long before Netflix's much more recent emphasis on that business. Netflix apparently hasn't, after all, solved the institutional problem that their DVD-shipping model worked around.

---------

Bit gold and I make brief appearances in Wired's Bitcoin article.

---------

Water remains fun. Digital fountains show how precisely drops can be located and timed:

This one is a bit different; the drops fall with uniform regularity but are used to display light:

Tuesday, July 05, 2011

Of wages and money: cost as a proxy measure of value

Nevertheless, as we shall see, using labor, or more generally cost, as a measure of value is a common strategy of our institutions and in itself often a quite valuable thing to do. How does this come about? The key to the puzzle is Yoram Barzel's idea of a proxy measure, which I have described as follows:

The process of determining the value of a product from observations is necessarily incomplete and costly. For example, a shopper can see that an apple is shiny red. This has some correlation to its tastiness (the quality a typical shopper actually wants from an apple), but it's hardly perfect. The apple's appearance is not a complete indicator -- an apple sometimes has a rotten spot down inside even if the surface is perfectly shiny and red. We call an indirect measure of value -- for example the shininess, redness, or weight of the apple -- a proxy measure. In fact, all measures of value, besides prices in an ideal market, are proxy measures -- real value is subjective and largely tacit.

Such observations also come at a cost. It may take some time to sort through apples to find the shiniest and reddest ones, and meanwhile the shopper bruises the other apples. It costs the vendor to put on a fake shiny gloss of wax, and it costs the shopper because he may be fooled by the wax, and because he has to eat wax with his apple. Sometimes these measurement costs come about just from the imperfection of honest communication. In other cases, such as waxing the apple, the cost occurs because rationally self-interested parties play games with the observable...

Cost can usually be measured far more objectively than value. As a result, the most common proxy measures are various kinds of costs. Examples include:

(a) paying for employment in terms of time worked, rather than by quantity produced (piece rates) or other possible measures. Time measures sacrifice, i.e. the cost of opportunities foregone by the employee.

(b) most numbers recorded and reported by accountants for assets are costs rather than market prices expected to be recovered by the sale of assets.

(c) non-fiat money and collectibles obtain their value primarily from their scarcity, i.e. their cost of replacement.

(From "Measuring Value").

The proxy measure that dominates most of our lives is the time wage:

To create anything of value requires some sacrifice. To successfully contract we must measure value. Since we can’t, absent a perfect exchange market, directly measure the economic value of something, we may be able to estimate it indirectly by measuring something else. This something else anchors the performance – it gives the performer an incentive to optimize the measured value. Which measures are the most appropriate anchors of performance? Starting in Europe by the 13th century, that measure was increasingly a measure of the sacrifice needed to create the desired economic value.

This is hardly automatic – labor is not value. A bad artist can spend years doodling, or a worker can dig a hole where nobody wants a hole. Arbitrary amounts of time could be spent on activities that do not have value for anybody except, perhaps, the worker himself. To improve the productivity of the time rate contract required two breakthroughs: the first, creating the conditions under which sacrifice is a better estimate of value than piece rate or other measurement alternatives, and second, the ability to measure, with accuracy and integrity, the sacrifice.

Piece rates measure directly some attribute of a good or service that is important to its value -- its quantity, weight, volume, or the like -- and then fix a price for it. Guild regulations which fixed prices often amounted to creating piece rates. Piece rates seem the ideal alternative for liberating workers, but they suffer from two drawbacks. First, the outputs of labor depend not only on effort, skills, etc. (things under the control of the employee), but also on things outside the employee's control. The employee wants something like insurance against these vagaries of the work environment. The employer, who has more wealth and knowledge of market conditions, takes on these risks in exchange for profit.

In an unregulated commodity market, buyers can reject or negotiate downwards the price of poor quality goods. Sellers can negotiate upwards or decline to sell. With piece rate contracts, on the other hand, there is a fixed payment for a unit of output. The second main drawback of piece rates is thus that they motivate the worker to put out more quantity at the expense of quality. This can be devastating. The tendency of communist countries to pay piece rates, rather than hourly rates, is one reason that, while the Soviet bloc’s quantity (and thus the most straightforward measurements of economic growth) was able to keep up with the West, quality did not (thus the contrast, for example, between the notoriously ugly and unreliable Trabant of East Germany and the BMWs, Mercedes, Audi and Volkswagens of West Germany).

Thus with the time-rate wage the employee is insured against vagaries of production beyond his control, including selling price fluctuations (in the case of a market exchange), or variation in the price or availability of factors of production (in the case of both market exchange and piece rates). The employer takes on these risks, while at the same time retaining incentives for quality employee output through promotions, raises, demotions, wage cuts, or firing.

Besides lacking implicit insurance for the employee, another limit to market purchase of each worker’s output is that it can be made prohibitively costly by relationship-specific investments. These investments occur when workers engage in interdependent production -- as the workers learn the equipment or adapt to each other. Relationship-specific investments can also occur between firms, for example building a cannon foundry next to an iron mine. These investments, when combined with the inability to write long-term contracts that account for all eventualities, motivate firms to integrate. Dealing with unspecified eventualities then becomes the right of the single owner. This incentive to integrate is opposed by the diseconomies of scale in a bureaucracy, caused by the distribution of knowledge, which market exchange handles much better. These economic tradeoffs produce observed distributions of firm sizes in a market, i.e. the number of workers involved in an employment relationship instead of selling their wares directly on a market.

The main alternative to market exchange of output, piece rate, or coerced labor (serfdom or slavery) consists of the employers paying by sacrifice -- by some measure of the desirable things the employee forgoes to pursue the employer’s objectives. An hour spent at work is an hour not spent partying, playing with the children, etc. For labor, this “opportunity cost” is most easily denominated in time – a day spent working for the employer is a day not spent doing things the employee would, if not for the pay, desire to do.

Time doesn’t specify costs such as effort and danger. These have to be taken into account by an employee or his union when evaluating a job offer. Worker choice, through the ability to switch jobs at much lower costs than with serfdom, allows this crucial quality control to occur.

It’s usually hard to specify customer preferences, or quality, in a production contract. It’s easy to specify sacrifice, if we can measure it. Time is immediately observed; quality is eventually observed. With employment via a time-wage, the costly giving up of other opportunities, measured in time, can be directly motivated (via daily or hourly wages), while quality is motivated in a delayed, discontinuous manner (by firing if employers and/or peers judge that quality of the work is too often bad). Third parties, say the guy who owned the shop across the street, could observe the workers arriving and leaving, and tell when they did so by the time. Common synchronization greatly reduced the opportunities for fraud involving that most basic contractual promise, the promise of time.

Once pay for time is in place, the basic incentives are in place – the employee is, verifiably, on the job for a specific portion of the day – so he might as well work. He might as well do the work, both quantity and quality, that the employer requires. With incentives more closely aligned by the calendar and the city bells measuring the opportunity costs of employment, to be compensated by the employer, the employer can focus observations on verifying the specific quantity and qualities desired, and the employee (to gain raises and avoid getting fired) focuses on satisfying them. So with the time-wage contract, perfected by northern and western Europeans in the late Middle Ages, we have two levels of the protocol in this relationship: (1) the employee trades away other opportunities to commit his time to the employer – this time is measured and compensated, (2) the employee is motivated, by (positively) opportunities for promotions and wage rate hikes and (negatively) by the threat of firing, to use that time, otherwise worthless to both employer and employee, to achieve the quantity and/or quality goals desired by the employer.

(from "A Measure of Sacrifice")
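The two-level protocol in the excerpt above can be sketched as a toy model in Python. This is a hypothetical illustration, not something from the essay: the class name TimeWageContract, the wage, and the max_bad_fraction firing threshold are assumptions chosen for the example. Level (1) pays for verified time immediately; level (2) applies delayed, discontinuous quality control through the threat of firing.

```python
from dataclasses import dataclass, field


@dataclass
class TimeWageContract:
    """Hypothetical toy model of the two-level time-wage protocol."""
    hourly_wage: float
    max_bad_fraction: float  # assumed firing threshold, not from the essay
    hours_logged: float = 0.0
    reviews: list[bool] = field(default_factory=list)

    def pay_for_time(self, hours: float) -> float:
        """Level 1: verified time is compensated at once, regardless of output."""
        self.hours_logged += hours
        return hours * self.hourly_wage

    def record_review(self, work_was_good: bool) -> None:
        """Level 2 input: quality is judged later by the employer or peers."""
        self.reviews.append(work_was_good)

    def should_fire(self) -> bool:
        """Level 2 sanction: fire only if work is judged bad too often."""
        if not self.reviews:
            return False
        bad_fraction = self.reviews.count(False) / len(self.reviews)
        return bad_fraction > self.max_bad_fraction


# Hypothetical usage: a day's pay is immediate; firing depends on accumulated reviews.
contract = TimeWageContract(hourly_wage=2.0, max_bad_fraction=0.25)
print(contract.pay_for_time(8))  # 16.0
for good in [True, True, False, True]:
    contract.record_review(good)
print(contract.should_fire())  # False: 1 bad in 4 is not above 0.25
```

The point of the separation is that payment never waits on the harder quality judgment; quality only feeds into the rarer, coarser decision to keep or dismiss the employee.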

Proxy measures also explain how money can depend on standards of nature instead of a singular trusted third party:

Although anatomically modern humans surely had conscious thought, language, and some ability to plan, it would have required little conscious thought or language, and very little planning, to generate trades. It was not necessary that tribe members reasoned out the benefits of anything but a single trade. To create this institution it would have sufficed that people follow their instincts to obtain collectibles with the characteristics outlined below (as indicated by proxy observations that make approximate estimations for these characteristics).

Utilitarian jewelry: silver shekels from Sumeria. The recipient of a payment would weigh the segment of coil used as payment and could cut it at an arbitrary spot to test its purity. A wide variety of other cultures, from the Hebrews and the famously commercial Phoenicians to the ancient Celtic and Germanic tribes, used such forms of precious metal money long before coinage.

At first, the production of a commodity simply because it is costly seems quite wasteful. However, the unforgeably costly commodity repeatedly adds value by enabling beneficial wealth transfers. More of the cost is recouped every time a transaction is made possible or made less expensive. The cost, initially a complete waste, is amortized over many transactions. The monetary value of precious metals is based on this principle. It also applies to collectibles, which are more prized the rarer they are and the less forgeable this rarity is. It also applies where provably skilled or unique human labor is added to the product, as with art.

(from "Shelling Out: The Origins of Money")
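The amortization argument in the excerpt above can be made concrete with a small calculation. The figures are purely hypothetical: a monetary unit that costs 100 (in any unit of account) to produce, and that is assumed to save 5 in transaction costs each time it enables or cheapens a transaction.

```python
def cost_per_transaction(production_cost: float, transactions: int) -> float:
    """Production cost spread evenly over the transactions the unit enables."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return production_cost / transactions


def net_value(production_cost: float, saving_per_transaction: float,
              transactions: int) -> float:
    """Cumulative transaction-cost savings minus the one-time production cost."""
    return saving_per_transaction * transactions - production_cost


# Hypothetical figures: cost 100, saving 5 per transaction.
for n in (1, 10, 20, 50):
    print(n, cost_per_transaction(100, n), net_value(100, 5, n))
```

With these made-up numbers the initial "waste" is fully recouped after 20 transactions, and every later transaction adds net value.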

The rational price of such a monetary commodity will be, ignoring more minor effects (e.g. the destruction of monetary units), the lesser of:

(1) the value a monetary unit is expected to add to future transactions, i.e. what it will save in transaction costs

(2) the cost of creating a new monetary unit

Since (1) cannot be objectively measured, but at best only intuitively estimated, as long as one is confident that (1)>(2), the cost of creating a monetary unit becomes a good proxy measure of the value of the monetary unit. If monetary units are not fungible, but their costs are comparable, their relative value can be well approximated by their relative costs even if (2)>=(1). If the expected value of a monetary unit drops below the replacement cost, producers stop creating new monetary units (gold miners, for example, stop mining gold when their production costs exceed this expected value), holding the money supply steady, which minimizes inflation. If it goes above replacement costs, more gold mines can be affordably worked, increasing the money supply as the economy grows without inflation. This all depends on having a secure floor to replacement costs, which is well approximated by gold and silver but violated by fiat currencies (as can be seen, for example, by comparing the rampant inflations of many 20th century fiat currencies against the quite mild, by comparison, inflations that followed discoveries such as Potosi silver in the 16th century and California gold in the 19th).
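The pricing rule in (1) and (2), and the supply response described above, can be sketched as follows. The function names are hypothetical, the all-or-nothing production rule with a fixed capacity is a simplification of the text's gold-mining example, and all numbers are made up.

```python
def rational_price(expected_tx_savings: float, replacement_cost: float) -> float:
    """Lesser of (1) expected transaction-cost savings and (2) replacement cost,
    ignoring minor effects such as the destruction of monetary units."""
    return min(expected_tx_savings, replacement_cost)


def new_units_produced(expected_value: float, production_cost: float,
                       capacity: int) -> int:
    """Simplified producer response: mine (or mint) only when it pays."""
    return capacity if expected_value > production_cost else 0


def next_money_supply(supply: int, expected_value: float,
                      production_cost: float, capacity: int) -> int:
    """Money supply after one period of the simplified producer response."""
    return supply + new_units_produced(expected_value, production_cost, capacity)


# Hypothetical numbers: expected value first below, then above, a cost of 4.
supply = 1000
supply = next_money_supply(supply, expected_value=3.0, production_cost=4.0, capacity=50)
print(supply)  # 1000: production stops, supply holds steady
supply = next_money_supply(supply, expected_value=5.0, production_cost=4.0, capacity=50)
print(supply)  # 1050: production resumes, supply grows
```

The "secure floor" in the text corresponds to production_cost being hard to fake or lower; a fiat currency behaves as if that cost were near zero.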

We often adapt institutions from prior similar institutions, and often by accident. So, for example, money can evolve from utilitarian commodities that have the best characteristics of money (securely storable, etc.) in a particular environment (e.g. cigarettes in a prison). But humans also have foresight and can reason by analogy, and so can design an exchange to trade new kinds of securities, a new kind of insurance service, or a new kind of currency.

Naturally such designs are still subject to a large degree of trial and error, of the creative destruction of the market. And they are subject to network externalities: to, roughly speaking, Metcalfe's law, which states the value of a network is proportional to the square of the number of its members, and that a network of one is useless. A telephone is useless unless somebody else you want to talk to also has a telephone. Likewise, currency is useless unless one has somebody to buy from or sell to who will take or give that currency. Indeed, with money the situation is even worse than most networks, because unless that person wants to do a corresponding sell or buy back to you at a later date, the money will get "stuck." It will have zero velocity. One needs either specific cycles to keep money circulating (as with the ancient kula ring), or a second currency with such cycles that can be exchanged for the first. Thus, the marketing problem of starting a new currency is formidable. Although Paypal was implementing just a payment system in dollars, not a new currency, it hit on a great strategy of general applicability: target specific communities of people that trade with each other (in Paypal's case, the popular eBay auction site).
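One common way to make Metcalfe's law concrete is to count the possible pairwise connections among n members, n(n-1)/2, which grows roughly as n squared and is zero for a network of one. This counting convention is an assumption chosen for illustration; the text states the law only roughly.

```python
def possible_links(members: int) -> int:
    """Distinct pairs of members who could call (or trade with) each other."""
    if members < 0:
        raise ValueError("members must be non-negative")
    return members * (members - 1) // 2


for n in (1, 2, 10, 100):
    print(n, possible_links(n))  # 1 0, 2 1, 10 45, 100 4950
```

Note that this counts only potential links; the text's further point about money is that a link is worthless for currency unless trade flows in both directions over time.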

But insisting that gold, silver, shells, online payment systems or currencies, etc. must be useful for some other purpose, such as decoration, before they can be used as money, is a terrible confusion, akin to insisting that an insurance service must start out as useful for something else, perhaps for stabling horses, before one can write the insurance contracts. Indeed many of us value precious metals and shells for decoration more for a reverse reason, which I explain in the above-linked essay on the origins of money.

Conclusion

The labor theory of value is wrong. Value is fundamentally a matter of subjective preferences. Nevertheless, Yoram Barzel's crucial idea of proxy measures allows us to understand why measures of labor and more generally measures of cost are so often and so usefully applied as measures of value in institutions, including wage contracts and non-fiat money.

Friday, June 24, 2011

Agricultural consequences of the Black Death

Under a naive reading of Malthusian theory, yield (the amount of food or fodder produced per unit area) should have improved after the Black Death: a lower population abandoned marginally productive lands and kept the better yielding ones. Furthermore, the increased ratio of livestock to arable acres should have improved fertilization of the fields, as more nutrients and especially nitrogen moved from wastes (wild lands) and pastures to the arable.

But while marginal lands were indeed abandoned (or at least converted from arable to pasture), and the livestock/arable ratio indeed increased, it's not the case that yield improved after the Black Death. In fact, the overall (average) yield of all arable probably declined slightly, and the yields of particular plots of land that were retained as arable usually declined quite substantially. Yields declined and for the most part stayed low for several centuries thereafter. Other factors must have been at work, factors stronger than the abandonment of marginally productive lands and the influence of more livestock on nutrient movement.

Per capita agricultural output (vertical axis) vs. population density (horizontal axis) in England before, during, and after the Black Death and through the agricultural revolutions of the succeeding centuries.

The main such factor is that increasing yield without great technological improvement requires more intensive labor: extra weeding, extra transport of manures and soil conditioners, etc. Contrariwise, a less intensive use of land readily leads to falling yield. After the Black Death, labor was more expensive relative to land, so it made sense to engage in a far less intensive agriculture. The increase of pasture and livestock at the expense of the arable was a part of this. But letting the yield decline was another. What increased quite dramatically in the century after the Black Death was labor productivity and per capita standard of living (see first three points on the diagram, each covering an 80-year period from 1260 to 1499).

Indeed, the whole focus in the literature on improvements in "productivity" as yield in the agricultural revolution has been very misleading. The crucial part of the revolution, and the direct cause of the release of labor from the agricultural to the industrial and transport sectors, was an increase in farm labor productivity: more food grown with less labor. Over short timescales, that is, without dramatic technological or institutional progress, farm labor productivity was inversely correlated with yield for the reasons stated. Higher labor productivity, certainly not higher yield, is what distinguished most of Europe, for example, from East and Southeast Asia, where, under intensive rice cultivation and abundant rain during warm summers, yield had long been far higher than in Europe. Per Malthus, however, population growth in these regions had kept pace with yield, resulting in a highly labor-intensive, low labor productivity style of agriculture, including much less use of draft animals for farm work and transport.

The bubonic plague was spread by rodents, who in turn fed primarily on grain stores. This also had a significant impact on agriculture, because grain-growing farmers and grain-eating populations were disproportionately killed and people fled areas that grew or stored grain for regions specializing in other kinds of agriculture. In coastal regions like Portugal there was a large move from grain farming to fishing. In Northern Europe there was a great move to pasture and livestock, and in particular a relative expansion of the unique stationary pastoralism that had started to develop there in the previous centuries, both due to the relative protection of pastoralists from the plague and the less labor-intensive nature of pastoralism after land had become cheaper relative to labor.

A final interesting effect is that the long trend from about the 11th to the 19th century in England of conversion of draft animals from oxen to horses halted temporarily in the century after the Black Death. The main reason for this was undoubtedly the reversion of arable to pasture or waste (wild lands). Oxen are, to use horse culture terminology, very "easy keepers". As ruminants they extract energy from cellulose-rich forage far more efficiently than horses can. Oxen, therefore, became relatively cheaper than horses in an era with more wastes and less (or at least much less intensive use of) arable for growing fodder. This kind of effect occurred again much later on the American frontiers, where there was a temporary, partial reversion from horses to oxen as draft animals given the abundance of wild lands relative to groomed pasture and arable for growing fodder. For example, the famous wagon trains to California and Oregon were pulled primarily by oxen, which could be fed much better than horses on the wild grasslands and arid wastes encountered. However, in England itself, the trend towards horses replacing oxen, fueled by the increasing growth of fodder crops, had resumed by 1500 and indeed accelerated in the 16th century, playing a central role in the transportation revolutions of the 15th through 19th centuries.

Saturday, June 11, 2011

Trotting ahead of Malthus

Progress in the Malthusian isocline in England from the 13th through 19th centuries, as, among many other factors, horses slowly replaced oxen for farm work and transport, the ratio of draft animals to people increased, and more arable land was devoted to fodder relative to food.


I have previously discussed Great Britain's unprecedented escape from the Malthusian trap. Such escapes require virtuous cycles, i.e. positive feedback loops that allow productive capital to accumulate faster than it is destroyed. There are a number of these in Britain in the era of escape from the Black Plague to the 19th century, but two of the biggest involved transportation.

One of these that I've identified was the fodder/horse/coal/lime cycle. More and better fodder led to more and stronger horses, which hauled (among other things) coal from the mines, initially little more than quarries, that had started opening up in northeastern England by the 13th century. Coal, like wood, was very costly to transport by land, but relatively cheap to ship by sea or navigable river. As fodder improved, horses came to replace oxen in the expensive step of transporting goods, including coal, from mine, farm, or workshop to port and from port to site of consumption. Many other regions (e.g. in Belgium and China) had readily accessible coal, but none developed this virtuous cycle so extensively and early.

Among the early uses of coal were heating, fuel for certain industrial processes that required heat (e.g. in brewing beer), and, most interestingly, burning lime. Burned lime, slaked with water, could, unlike the limestone it came from, be easily ground into a fine powder. This greatly increased the surface area of this chemical base, which let it de-acidify the soils to which it was applied. The soils of Britain tend to be rather acidic, which allows toxic minerals, such as aluminum, to be absorbed by plants and blocks the absorption of needed nutrients (especially NPK -- nitrogen, phosphorus, and potassium). The application of lime thus increased the productivity of fields for growing both food and fodder, completing the virtuous cycle. This cycle operated most strongly during the period of consistent progress in the English Malthusian isocline from the 15th through 19th centuries.

Another, related, but even more important cycle was the horse/transport and institutions/markets/specialization cycle. More and better horses improved the transport of goods generally, including agricultural goods. John Langdon [1] estimates that a team of horses could transport a wagon of goods twice as fast as a team of oxen, which suggests a factor of four increase in market area. Add to this the Western European innovations in sailing during the 15th century (especially the trading vessels and colliers that added lateen sail(s) to the traditional square sails, allowing them to sail closer to the wind), and market regions in much of Western Europe and especially Great Britain were greatly expanded in the 15th and 16th centuries.
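The step from "twice as fast" to "a factor of four" in market area follows if one assumes, as a simplification not spelled out in the text, that a market's area is a circle whose radius is the distance a team can haul goods in a fixed travel time. Area then scales with the square of speed. The speeds and hours below are hypothetical; only their 2:1 ratio comes from Langdon's estimate.

```python
import math


def market_area(speed_km_per_h: float, travel_hours: float) -> float:
    """Area (km^2) of a circular market reachable within a fixed travel time."""
    radius_km = speed_km_per_h * travel_hours
    return math.pi * radius_km ** 2


ox_speed = 3.0     # hypothetical km/h for an ox team
horse_speed = 6.0  # twice as fast, per the 2:1 ratio in the text
hours = 8.0        # hypothetical one-day haul

ratio = market_area(horse_speed, hours) / market_area(ox_speed, hours)
print(round(ratio, 6))  # 4.0
```

The absolute numbers cancel out: whatever speeds or hours are assumed, doubling the speed quadruples the area under this circular-market assumption.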

Expanded markets in turn allowed a great elaboration of the division of labor. Adam Smith famously described how division of labor and specialization greatly improved the productivity of industry, but to some extent this also operated in British agriculture. Eric Kerridge [2] eloquently described the agricultural regions of Britain, each specializing in different crops and breeds of livestock. Some were even named after their most famous specializations: "Butter Country", "Cheese Country", "Cheshire Cheese Country", "Saltings Country", etc. These undoubtedly emerged during the 15th through 19th centuries, as prior to that time European agriculture had been dominated by largely self-sufficient manors. And closing the cycle, there emerged several localities that specialized in breeding a variety of horses. Most spectacularly, different British regions during this period bred no fewer than three kinds of large draft horses: the Shire Horse, the Suffolk Punch (in that eastern English county), and the Clydesdale (in that Scottish region).

Institutions such as advertising and commercial law emerged or evolved to allow lower transaction cost dealings between strangers. This was probably by far the most non-obvious and difficult aspect of the problem of expanding markets. Greatly aiding in this evolution was the growth of literacy due to the spread in Europe of inexpensive books produced by printing, especially in the 16th and later centuries. Where in the Middle Ages literacy had been the privilege of a religious elite, with very few on a manor literate beyond the steward, after the 15th century an ever increasing population could read advertisements, order goods remotely, and read and even draft contracts.

Another effect of longer-distance transportation was to open up more remote lands, which had been too marginal to support self-sufficient agriculture, to reclamation for use in specialized, trade-dependent agriculture. The ability to lime soils that were otherwise too acidic also often contributed to reclamation.

There were many other improvements to English agriculture and transportation during these crucial centuries, but the above cycles were probably the most important up to the 19th century. Here I'll mention three important transportation improvements later in this period that in many ways show the culmination of the underlying trend: (1) the development of the turnpike (private toll) roads in the 18th century and the associated development of scheduled transportation services, (2) a great expansion in the use of horse-drawn rail cars in coal and other mines, and (3) the development of river navigations largely in the 17th and 18th centuries, and canals largely in the 18th and early 19th centuries, again almost entirely through private investment and ownership.

As coal mines were dug deeper, they were increasingly flooded with water. Long before the steam engine, these mines were pumped by horse-powered gins. Here horses on a gin power an axle (bent through a Hooke universal joint) and belt, in this case powering an 18th century farmyard innovation, the threshing machine. The horses know to not step on the rapidly spinning axle. I'm going to guess that the horse gin was the inspiration for the merry-go-round:

Horse-drawn wagons on rails took coal from mines to docks on navigable rivers and (no small matter) pulled the empty wagons back uphill. Coal and other ores were hauled by horses over these wagonways over distances of a few kilometers. They were almost exclusively used for mines, quarries, clay pits, and the like, running from mine mouth to navigable water. Here's a horse pulling coal on a wagonway:

More horses pulling coal on rails:

Now the only thing left of horse-drawn rail are tourist relics:

Even in 1903 the London streets were still powered by horses (see if you can spot the two still-rare horseless carriages):

And now for a taste of the British river navigations and canals:

The Trent and Mersey canal, built in the late 18th century, could take you from one side of England to the other:

Where Romans had used aqueducts to move water, the British used them in their canal system to move goods where their canals needed to span valleys. A large number of aqueducts were built from stone in Britain during its canal boom in the 18th century. This was the original Barton Aqueduct on the Bridgewater Canal (1760s):

In the late 18th century the smelting of iron using coal instead of wood was perfected. Soon thereafter iron became cheap enough to use for constructing bridges and aqueducts. One result was the spectacular Pontcysyllte Aqueduct in Wales. What in the early 19th century was a towpath for horses is now a sidewalk for tourists. Careful, it's a long way down!

References

[1] John Langdon, Horses, Oxen, and Technological Innovation

[2] Eric Kerridge, The Farmers of Old England

Saturday, May 28, 2011

Bitcoin, what took ye so long?

The short answer about why it took so long is that the bit gold/Bitcoin ideas were nowhere remotely close to being as obvious as gwern suggests. They required a very substantial amount of unconventional thought, not just about the security technologies gwern lists (and I'm afraid the list misses one of the biggest ones, Byzantine-resilient peer-to-peer replication), but about how to choose and put together these protocols and why. Bitcoin is not a list of cryptographic features; it's a very complex system of interacting mathematics and protocols in pursuit of what was a very unpopular goal.

While the security technology is very far from trivial, the "why" was by far the biggest stumbling block -- nearly everybody who heard the general idea thought it was a very bad idea. Wei Dai, Hal Finney, and I were the only people I know of who liked the idea (or in Dai's case his related idea) enough to pursue it to any significant extent until Nakamoto (assuming Nakamoto is not really Finney or Dai). Only Finney (RPOW) and Nakamoto were motivated enough to actually implement such a scheme.

The "why" requires coming to an accurate understanding of two difficult and almost always misunderstood topics, namely trust and the nature of money. The overlap between cryptographic experts and libertarians who might sympathize with such a "gold bug" idea is already rather small, since most cryptographic experts earn their living in academia and share its political biases. Even within this uncommon intersection, very few people thought it was a good idea. Even gold bugs didn't care for it: we already have real gold rather than mere bits, they reasoned, and we can pay online simply by issuing digital certificates based on real gold stored in real vaults, a la the formerly popular e-gold. On top of the plethora of these misguided reactions and criticisms, there remain many open questions and arguable points about these kinds of technologies and currencies, many of which can only be settled by actually fielding them and seeing how they work in practice, in both economic and security terms.

Here are some more specific reasons why the ideas behind Bitcoin were very far from obvious:

(1) Only a few people had read of the bit gold ideas. Although I came up with them in 1998 (at the same time and on the same private mailing list where Dai was coming up with b-money; it's a long story), they were mostly not described in public until 2005, though I had described various pieces earlier, for example the crucial Byzantine-replicated chain-of-signed-transactions part, which I generalized into what I call secure property titles.

(2) Hardly anybody actually understands money. Money just doesn't work like that, I was told fervently and often. Gold couldn't work as money until it was already shiny or useful for electronics or something else besides money, they told me. (Do insurance services also have to start out useful for something else, maybe as power plants?) This common argument came, ironically, from libertarians who misinterpreted Menger's account of the origin of money as the only way it could arise (rather than as an account of how it could arise) and, in the same way, misapplied Mises' regression theorem. They did so even though I had rebutted these arguments in my study of the origins of money, which I humbly suggest should be required reading for anybody debating the economics of Bitcoin.

There's nothing like Nakamoto's incentive-to-market scheme to change minds about these issues. :-) Thanks to RAMs full of coin with "scheduled deflation", there is now no shortage of people willing to argue in its favor.

(3) Nakamoto fixed a significant security shortcoming in my design by requiring a proof-of-work to be a node in the Byzantine-resilient peer-to-peer system. This lessens the threat of an untrustworthy party controlling the majority of nodes and thereby corrupting a number of important security features. Yet another feature obvious in hindsight, quite non-obvious in foresight.

(4) My design used an automated market to account for the fact that the difficulty of puzzles can change radically with hardware improvements and cryptographic breakthroughs (i.e. discovering algorithms that can solve proofs-of-work faster), as well as for the unpredictability of demand. Nakamoto instead designed a Byzantine-agreed algorithm that adjusts the difficulty of puzzles. I can't decide whether this aspect of Bitcoin is more feature or more bug, but it does make it simpler.
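To make points (3) and (4) concrete, here is a minimal Python sketch of a hash-based proof-of-work puzzle plus a difficulty retarget rule loosely modeled on Bitcoin's (the target is rescaled every 2016 blocks toward ten-minute spacing, with the measured window clamped to a factor of four either way). The function names and the toy header are hypothetical. Real Bitcoin hashes a structured header with double SHA-256, encodes the target compactly, and reaches agreement by following the chain with the most cumulative work; none of that is modeled here.

```python
import hashlib

EXPECTED_WINDOW_SECONDS = 2016 * 600  # 2016 blocks at one per ten minutes


def meets_target(header: bytes, nonce: int, target: int) -> bool:
    """True if SHA-256(header + nonce), read as a 256-bit integer, is below target."""
    digest = hashlib.sha256(header + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big") < target


def solve_puzzle(header: bytes, target: int, max_tries: int = 10_000_000) -> int:
    """Brute-force a nonce; on average this takes about 2**256 / target hashes."""
    for nonce in range(max_tries):
        if meets_target(header, nonce, target):
            return nonce
    raise RuntimeError("no nonce found within max_tries")


def retarget(old_target: int, actual_seconds: int,
             expected_seconds: int = EXPECTED_WINDOW_SECONDS) -> int:
    """Rescale the target in proportion to how long the last window took.

    Faster-than-expected blocks shrink the target (harder puzzles), slower
    blocks grow it (easier puzzles). The measured time is clamped to a
    factor of four either way; integer arithmetic avoids float rounding.
    """
    clamped = max(expected_seconds // 4, min(expected_seconds * 4, actual_seconds))
    return old_target * clamped // expected_seconds


header = b"toy block header"
target = 2 ** 240                      # roughly 1 in 65,536 hashes succeeds
nonce = solve_puzzle(header, target)
assert meets_target(header, nonce, target)
harder = retarget(target, EXPECTED_WINDOW_SECONDS // 2)  # blocks came twice as fast
print("nonce:", nonce, "new target is half the old:", harder == target // 2)
```

A lower target means fewer acceptable hashes and thus harder puzzles, so when blocks arrive twice as fast as intended the sketch halves the target. This is the kind of automatic, agreed-upon adjustment that replaces a market for puzzle difficulty.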

Monday, May 23, 2011

Lactase persistence and quasi-pastoralism

The lactase regulation mutation that spread most dramatically was the European one, which by now occurs in nearly the entire population of northwest European countries.

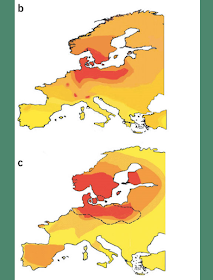

Modern frequency of lactase non-persistence as percent of the population in Europe.

This European lactase persistence allele soon came to be most concentrated along the Baltic and North Seas, what I call the core area of lactase persistence. Due to the heavy use of cattle in the core region, lactase persistence spread rapidly there, surpassing half the population by about 1500 BC.
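The text gives only the outcome (more than half the core population persistent by about 1500 BC). One standard way to think about how quickly a favored dominant allele such as lactase persistence can spread is a one-locus selection model. The sketch below assumes random mating, a constant fitness advantage s for carriers, and no migration; the starting frequency, s, and the 25-year generation time in the usage lines are purely hypothetical, not estimates for the European allele.

```python
def next_freq(p: float, s: float) -> float:
    """One generation of selection on a dominant allele at frequency p.

    Carriers (AA and Aa) have fitness 1 + s, non-carriers (aa) have fitness 1.
    Genotypes are assumed to be in Hardy-Weinberg proportions each generation.
    """
    q = 1.0 - p
    mean_fitness = 1.0 + s * (1.0 - q * q)
    return p * (1.0 + s) / mean_fitness


def carrier_fraction(p: float) -> float:
    """Share of people with at least one copy, i.e. the persistent phenotype."""
    return 1.0 - (1.0 - p) ** 2


def generations_to_majority(p0: float, s: float, max_gens: int = 100_000) -> int:
    """Generations until more than half the population carries the allele."""
    p = p0
    for gen in range(max_gens):
        if carrier_fraction(p) > 0.5:
            return gen
        p = next_freq(p, s)
    raise RuntimeError("allele did not reach a majority within max_gens")


# Hypothetical inputs only: 1% starting allele frequency, 5% carrier advantage.
gens = generations_to_majority(p0=0.01, s=0.05)
print(gens, "generations, about", gens * 25, "years at an assumed 25 years per generation")
```

Because the allele is dominant, every carrier shows the advantage, so selection acts efficiently while the allele is rare; this is one reason modest advantages can add up to rapid spread over a few thousand years.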

The European lactase-persistent populations played a substantial role in world history. The Baltic and North Sea coasts were the source of most of the cultures that conquered and divvied up the Western Roman Empire in the fourth through seventh centuries: Angles and Saxons (founded England), Ostrogoths and Lombards (Italy), Visigoths (Spain), Vandals (conquered north Africa), Frisians (the Low Countries) and Franks (France) among others. Later the lactase persistence core would produce the Vikings, who explored and conquered from Russia to North America and as far south as the Mediterranean, and the Normans, who invaded England, Sicily, and southern Italy and led the Crusades, among other exploits. Still later, mostly lactase-persistent populations would found the worldwide Portuguese, Spanish, French, Dutch and British empires and originate the agricultural and industrial revolutions.

Estimated spread of the European lactase persistence allele in the core region in northern Europe over time.

This was by no means the only gene evolving in the core. Indeed, the same cattle-heavy agriculture of northern Europe, probably originating in and migrating from central Europe, also gave rise to a great diversity of genes encoding cow milk proteins.

We found substantial geographic coincidence between high diversity in cattle milk genes, locations of the European Neolithic cattle farming sites (>5,000 years ago) and present-day lactose tolerance in Europeans. This suggests a gene-culture coevolution between cattle and humans. [ref]

(b) diversity of cow milk protein alleles, (c) frequency of human lactase persistence.

Associated with the lactase persistence core was a unique system of agriculture I call quasi-pastoralism. It can be distinguished from the normal agriculture that was standard to civilization in southern Europe, the Middle East, and South and East Asia, in having more land given up to pasture and arable fodder crops than to arable food crops. Nearly all agriculture combined livestock with food crops, due to the crucial role of livestock in transporting otherwise rapidly depleted nutrients, especially nitrogen, from the hinterlands to the arable. However, as arable produces far more calories, and about as much protein, per acre, civilizations typically maximized their populations by converting as much of their land as possible to arable and putting almost all the arable to food crops instead of fodder, leaving only enough livestock for plowing and the occasional meat meal for the elite. Quasi-pastoral societies, on the other hand, devoted far more land to livestock and worked well where the adults could directly consume the milk.

Quasi-pastoralism can be distinguished from normal, i.e. nomadic, pastoralism in being stationary and having a substantial amount of land given over to arable crops, both food (for the people) and fodder (for the livestock). A precondition for quasi-pastoralism was the stationary bandit politics of civilization rather than the far more wasteful roving bandit politics of nomads.

Compared to the normal arable agriculture of the ancient civilizations, quasi-pastoralism lowered the costs of transporting food -- both food on hoof and, in more advanced quasi-pastoral societies such as late medieval England, food being transported by the greater proportion of draft animals. This increased the geographical extent of markets and thus the division of labor. England and the Low Countries, among other core areas, gave rise to regions that specialized in cheese, butter, wool, and meat of various kinds (fresh milk itself remained hard to transport until refrigeration). A greater population of draft animals also made grain and wood (for fuel and construction) cheaper to transport. With secure property rights, investments in agricultural capital could meet or exceed those in a normal arable society. The population of livestock had been mainly limited, especially in northern climates, by the poor pasture available in the winter and early spring. While hay -- the growth of fodder crops in the summer for storage and use in winter and spring -- is reported in the Roman Empire, the first known use of a substantial fraction of arable land for hay occurred in the cattle-heavy core in northern Europe.

England's unprecedented escape from the Malthusian trap.

Hay, especially hay containing large proportions of nitrogen-rich vetch and clover, allowed livestock populations in the north to greatly increase. The heavy use of livestock made for a greater substitution of animal for human labor on the farm as well as for transport and war, leading to more labor available for non-agricultural pursuits such as industry and war. The great population of livestock in turn provided more transport of nitrogen and other otherwise rapidly depleted nutrients from hinterlands and creek-flooded meadows to arable than in normal arable societies, leading to greater productivity of the arable that could as much as offset the substantial proportion of arable devoted to fodder. The result was that agricultural productivity by the 19th century was growing so rapidly that it outstripped even a rapidly growing population, and Great Britain became the first country to escape from the Malthusian trap.

There are thus, in summary, deep connections between the co-evolution of milk protein in cows and lactase persistence in humans, the flow of nitrogen and other crucial nutrients from their sources to the fields, quasi-pastoralism with its stationary banditry and secure property rights, and the eventual agricultural and industrial revolutions of Britain, which we have just begun to explore.

Tuesday, February 15, 2011

Some speculations on the frontier below our feet

One possible fix to this earth-blindness is the neutrino, and more speculatively and generally, dark matter. We can detect neutrinos and anti-neutrinos by (I'm greatly oversimplifying here, physicists please don't cringe) setting up big vats of clear water in complete darkness and lining them with ultra-sensitive cameras. The feature of neutrinos is that they rarely interact with normal matter, so that most of them can fly from their source (nuclear reactions in the earth or sun) through the earth and still be detected. The bug is that almost all of them fly through the detector, too. Only a tiny fraction hit a nucleus in the water and interact, giving off a telltale photon (a particle of light) which is picked up by one of the cameras. It is common now to detect neutrinos from nuclear reactors and the sun, and more recently we have started using some crude instruments to detect geo-neutrinos (i.e. neutrinos or anti-neutrinos generated by the earth not the sun). With enough vats and cameras we may be able to detect enough of these (anti-)neutrinos from nuclear reactions (typically radioactive decays) in the earth's crust to make a detailed radioisotope map (and thus go a long way towards a detailed chemical map) of the earth's interior. For the first time we'd have detailed pictures of the earth's interior instead of very indirect and often questionable inferences. A 3D Google Earth. These observatories may also be a valuable intelligence tool, detecting secret nuclear detonations and reactors being used to construct nuclear bomb making material, via the tell-tale neutrinos these activities give off.

Other forms of weakly interacting particles, the kind that probably make up dark matter, may be much more abundant but interact even more weakly than neutrinos. So weakly we haven't even detected them yet. They're just the best theory we have to explain why galaxies hang together: if they consisted only of the visible matter they should fly apart. Nevertheless, depending on what kinds of dark particles we discover, and on what ways they weakly interact with normal matter, we may find more ways of taking pictures of the earth's interior.

What might we find there? One possibility: an abundance of hydrogen created by a variety of geological reactions and sustained by the lack of oxygen. Scientists have discovered that the predominant kinds of rocks in the earth's crust contain quite a bit of hydrogen trapped inside them: on average about five liters of hydrogen per cubic meter of rock. This probably holds at least to the bottom of the lithosphere. If so, that region contains about 150 million trillion liters of hydrogen.
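The 150 million trillion (1.5 × 10^20) liter figure can be sanity-checked by treating the lithosphere as a uniform spherical shell. That is our simplifying assumption: real lithospheric thickness varies widely, thinner under the oceans and thicker under old continents. The sketch computes the hydrogen in a shell of given depth at five liters per cubic meter and inverts that to find the depth the quoted total implies, which comes out at roughly 60 km.

```python
import math

EARTH_RADIUS_M = 6.371e6     # mean radius of the earth
H2_LITERS_PER_M3 = 5.0       # average figure quoted in the text


def shell_volume_m3(depth_m: float, radius_m: float = EARTH_RADIUS_M) -> float:
    """Volume of the spherical shell between the surface and depth_m."""
    inner = radius_m - depth_m
    return 4.0 / 3.0 * math.pi * (radius_m ** 3 - inner ** 3)


def hydrogen_liters(depth_m: float) -> float:
    """Hydrogen in the shell, assuming a uniform 5 liters per cubic meter."""
    return shell_volume_m3(depth_m) * H2_LITERS_PER_M3


def depth_for_liters(total_liters: float) -> float:
    """Invert hydrogen_liters by bisection; volume grows monotonically with depth."""
    lo, hi = 0.0, EARTH_RADIUS_M
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if hydrogen_liters(mid) < total_liters:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


implied = depth_for_liters(1.5e20)    # "150 million trillion liters"
print(f"implied shell depth: {implied / 1000:.0f} km")
print(f"hydrogen in a 100 km shell: {hydrogen_liters(100e3):.1e} liters")
```

A thicker 100 km shell would hold about 2.5 × 10^20 liters, so the quoted total is, if anything, on the conservative side for a lithosphere-deep reservoir at that concentration.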

Sufficiently advanced neutrino detectors might be able to see this hydrogen via its tritium, which gives off an anti-neutrino when it decays. Tritium, with its half-life of about 12 years, is very rare, but it is created when a more common hydrogen isotope, deuterium, captures a neutron released by more common nuclear events involving the radioisotopes that are common in the earth's crust. About one-millionth of the deuterium in the heavy water moderating a nuclear reactor is converted into tritium in a year. This rate will be far less in the earth's interior but may still be significant enough compared to tritium's half-life that a sufficiently sensitive neutrino detector of the future, calibrated against the much greater stream of neutrinos coming from the sun, may detect hydrogen via these geotritium-generated anti-neutrinos. However, the conversion of deuterium to tritium in the earth's interior may be so rare that we will be forced to infer the abundance of hydrogen from the abundance of other elements. Almost all elements have radioisotopes that give off neutrinos or anti-neutrinos when they decay, and most of these are probably much more common in the earth's interior than tritium.
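Because tritium decays within decades, the amount present underground should track its current production rate rather than accumulate over geological time. A minimal model: production at a fractional rate k per year of the deuterium present, decay at λ = ln 2 / 12.3 per year, giving a steady-state tritium-to-deuterium ratio of k/λ. The reactor-like rate of one-millionth per year comes from the text; treating the deuterium pool as constant is our assumption, and any geological rate plugged in would be hypothetical.

```python
import math

TRITIUM_HALF_LIFE_YR = 12.3


def decay_constant(half_life_yr: float = TRITIUM_HALF_LIFE_YR) -> float:
    """Decay rate per year: ln 2 divided by the half-life."""
    return math.log(2) / half_life_yr


def equilibrium_ratio(production_per_yr: float) -> float:
    """Steady-state tritium/deuterium ratio.

    dT/dt = k*D - lam*T, with D treated as constant, settles at T/D = k / lam.
    """
    return production_per_yr / decay_constant()


def ratio_after(years: float, production_per_yr: float) -> float:
    """Tritium/deuterium ratio after `years`, starting with no tritium."""
    lam = decay_constant()
    return equilibrium_ratio(production_per_yr) * (1.0 - math.exp(-lam * years))


reactor_rate = 1e-6             # about one-millionth per year, per the text
print(f"reactor-like equilibrium T/D: {equilibrium_ratio(reactor_rate):.1e}")
print(f"fraction of equilibrium after 50 years: "
      f"{ratio_after(50, reactor_rate) / equilibrium_ratio(reactor_rate):.2f}")
```

The equilibrium ratio scales linearly with the production rate, so a geological rate a million times lower than a reactor's would give a tritium abundance a million times lower, which is why such a detector would need to be so sensitive.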

Another possibility for detecting hydrogen is, instead of looking for geo-neutrinos, to look at how the slice of earth one wants to study absorbs solar neutrinos. This would require at least two detectors, one to look at the (varying) unobstructed level of solar neutrinos and the other lined up so that the geology being studied is between that detector and the sun. This differential technique may work even better if we have a larger menagerie of weakly interacting particles ("dark matter") to work with, assuming that variations in nuclear structure can still influence how these particles interact with matter.
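The two-detector differential technique can be sketched as simple counting statistics. Assuming two identical detectors counting over the same period (so that variations in the solar flux cancel), the absorbed fraction is estimated from the ratio of their counts, and Poisson noise sets how many counts are needed to see a given absorption. The function names and the example counts are hypothetical; real detectors would also need cross-calibration of their efficiencies and backgrounds.

```python
import math


def absorbed_fraction(obstructed: int, unobstructed: int) -> tuple[float, float]:
    """Estimate the fraction of flux absorbed along the obstructed line of sight.

    Assumes two identical detectors counting over the same period, so the
    only differences between them are absorption and Poisson noise.
    Returns (estimate, one-sigma uncertainty) from standard error propagation.
    """
    ratio = obstructed / unobstructed
    sigma = ratio * math.sqrt(1.0 / obstructed + 1.0 / unobstructed)
    return 1.0 - ratio, sigma


def counts_needed(fraction: float, n_sigma: float = 3.0) -> int:
    """Counts per detector needed to see `fraction` at n_sigma.

    Small-fraction approximation: sigma ~ sqrt(2 / N), so N ~ 2 * n_sigma**2 / fraction**2.
    """
    return math.ceil(2.0 * n_sigma ** 2 / fraction ** 2)


estimate, sigma = absorbed_fraction(obstructed=9_900, unobstructed=10_000)  # made-up counts
print(f"absorbed fraction {estimate:.4f} +/- {sigma:.4f}")
print("counts needed for a 1% effect at 3 sigma:", counts_needed(0.01))
```

For small absorbed fractions the required counts grow as 1/f², so halving the absorption one hopes to detect quadruples the exposure needed. That scaling is the main reason such a scheme would demand very large detector arrays.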

It's possible that a significant portion of the hydrogen known to be locked into the earth's rocks has been freed or can be freed merely by the process of drilling through that rock, exposing the highly pressurized hydrogen in deep rocks to the far lower pressures above. This is suggested by the Kola Superdeep Borehole, one of those abandoned Cold War super-projects. In this case instead of flying rockets farther than the other guy, the goal was to drill deeper than the other guy, and the Soviets won this particular contest: over twelve kilometers straight down, still the world record. They encountered something rarely encountered in shallower wells: a "large quantity of hydrogen gas, with the mud flowing out of the hole described as 'boiling' with hydrogen."

The consequences of abundant geologic hydrogen could be two-fold. First, since a variety of geological and biological processes convert hydrogen to methane (and the biological conversion, by bacteria appropriately named "methanogens", is the main energy source for the deep biosphere, which probably substantially outweighs the surface biosphere), it suggests that our planet's supply of methane (natural gas) is far greater than its supply of oil or its currently proven natural gas reserves, so that (modulo worries about carbon dioxide in the atmosphere) our energy use can continue to grow for many decades to come courtesy of this methane.

Second, the Kola well suggests the possibility that geologic hydrogen itself may become an energy source, and one that frees us from having to put more carbon dioxide in the atmosphere. The "hydrogen economy" some futurists go on about, consisting of fuel-cell-driven machinery, depends on making hydrogen, which in turn requires a cheap source of electricity. This is highly unlikely unless we figure out a way to make nuclear power much cheaper. But by contrast geologic hydrogen doesn't have to be made, it only has to be extracted and purified. If just ten percent of the hydrogen in the lithosphere turns out to be recoverable over the next 275 years, that's enough by my calculations to enable a mild exponential growth in energy usage of 1.5%/year over that entire period (starting from the energy equivalent of today's natural gas usage). During most of that period human population is expected to be flat or falling, so practically that entire increase would be in per capita usage. To put this exponential growth in perspective, at the end of that period a person would be consuming, directly or indirectly, about 330 times as much hydrogen energy as they consume in natural gas energy today. And since it's hydrogen, not hydrocarbon, burning it would not add any more carbon to the atmosphere, just a small amount of water.
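Compound growth is easy to misjudge, so here is the arithmetic behind the 1.5%/year scenario as a short Python sketch. It computes only aggregate figures: the multiple of today's annual usage reached after 275 years (about 60) and the cumulative total the recoverable hydrogen would have to supply, measured in years of today's usage. How those aggregates translate into the per-person multiple quoted above depends on how far population falls over the period, which this sketch does not model.

```python
import math


def growth_factor(rate: float, years: int) -> float:
    """Annual usage after `years` of compound growth, as a multiple of today's."""
    return (1.0 + rate) ** years


def cumulative_usage(rate: float, years: int) -> float:
    """Total usage over the period in units of today's annual usage.

    Sum of (1 + rate)**t for t = 0 .. years - 1, a geometric series.
    """
    return ((1.0 + rate) ** years - 1.0) / rate


def doubling_time(rate: float) -> float:
    """Years for usage to double at a constant compound growth rate."""
    return math.log(2) / math.log(1.0 + rate)


RATE, YEARS = 0.015, 275        # the scenario in the text
print(f"final annual usage: {growth_factor(RATE, YEARS):.0f}x today's")
print(f"cumulative usage: {cumulative_usage(RATE, YEARS):.0f} years of today's usage")
print(f"doubling time: {doubling_time(RATE):.1f} years")
```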

Luckily our drilling technology is improving: the Kola well took nearly two decades to drill at a leisurely pace of about 2 meters per day. Modern oil drilling often proceeds at 200 meters/day or higher, albeit not to such great depths. Synthetic diamond, used to coat the tips of the toughest drills, is much cheaper than during the Cold War and continues to fall in price, and we have better materials for withstanding the high temperatures and pressures that stymied the Kola project (their goal was 15 kilometers down) and that we will encounter when we get to the bottom of the earth's crust and proceed into the upper mantle.

A modern drill bit studded with polycrystalline diamond

Of course, I must stress that the futuristic projections given above are quite speculative. We may not figure out how to affordably build a network of neutrino detecting vats massive enough or of high enough precision to create detailed chemical maps of the earth's interior. And even if we create such maps, we may discover not so much hydrogen, or that the hydrogen is hopelessly locked up in the rocks and that the Kola experience was a fluke or misinterpretation. Nevertheless, if nothing else this exercise shows, despite all the marvelous stargazing science that we have done, how much mysterious ground we have below our shoes.

Wednesday, February 09, 2011

Great stagnation or external growth?

These pessimistic observations of long-term economic growth are in many ways a much needed splash of cold water in the face for the Kurzweilian "The Singularity is Near" crowd, the people who think nearly everything important has been growing exponentially. And it is understandable for an economist to observe a great stagnation because there has indeed been a great stagnation in real wages as economists measure them: real wages in the developed world grew spectacularly during most of the 20th century but have failed to grow during the last thirty years.

Nevertheless Cowen et al. are being too pessimistic, reacting too much to the recent market problems. (Indeed the growing popularity of pessimistic observations of great stagnations, peak oil, and the like strongly suggests it's a good time to be long the stock markets!) These melancholy stories fail to take into account the great recent increases in value that are subjectively obvious to almost all good observers who have lived through the last twenty years but that economists have been unable to measure.

In many traditional industries, such as transportation and real estate, the pessimistic thesis is largely true. The real costs of commuting, buying real estate near where my friends are and where I want to work, of getting a traditional college education, and a number of other important things have risen significantly over the past twenty years. These industries are going backwards, becoming less efficient, delivering less value at higher cost: if we could measure their productivity it would be falling.

On the other hand, the costs of manufacturing goods whose costs primarily reflect manufacturing rather than raw materials have fallen substantially over the last twenty years, at about the same rate as in prior decades. Of course, most of these gains have been in the developing and BRICs countries, for a variety of reasons, such as the higher costs of regulation in the developed world and the greater access to cheaper labor elsewhere, but those of us in the U.S., Europe and Japan still benefit via cheap imports that allow us to save more of our money for other things. But perhaps even more importantly, outside of traditional education and mass media we have seen a knowledge and entertainment sharing revolution of unprecedented value. I argue that what looks like a Great Stagnation in the traditional market economy is to a significant extent a product of a vast growth in economic value that has occurred on the Internet and largely outside of the traditional market economy, and a corresponding cannibalization of and brain drain from traditional market businesses.

Most of the economic growth during the Internet era has been largely unmonetized, i.e. external to the measurable market. This is most obvious for completely free services like Craigslist, Wikipedia, many blogs, open source software, and many other services based on content input by users. But ad-funded Internet services also usually create a much greater value than is captured by the advertising revenues. These include search, social networking, many online games, broadcast messaging, and many other services. Only a small fraction of the Internet's overall value has been monetized. In other words, the vast majority of the Internet's value is what economists call an externality: it is external to the measurable prices of the market. Of course, since this value is unmeasured, this thesis is extremely hard to prove or disprove, and can hardly be called scientific; mainly it just strikes me as subjectively obvious. "Social science" can't explain most things about society and this is one of them.

What's worse for the traditional market (as opposed to this recent tsunami of unmonetized voluntary information exchange), this tidal wave of value has greatly reduced the revenues of certain industries. The direct connection the Internet provides between authors and readers has put many bookstores out of business. Online classifieds and free news sources have cannibalized newspapers and magazines. Wikipedia is destroying demand for the traditional encyclopedia. Free and cut-price music has caused a substantial decline in music industry revenues. So the overall effect is a great increase in value combined with a perhaps small, but I'd guess significant, reduction in what GDP growth would have been without the Internet.

What are some of the practical consequences? Twenty years ago most smart people did not have an encyclopedia in the home or at the office. Now the vast majority in the developed and even hundreds of millions in the BRICs countries do, and many even have it in the car or on the train. Twenty years ago it was very inconvenient and cost money to place a tiny classified ad that could only be seen in the local newspaper; now it is very easy and free to place an ad of proper length that can be seen all over the world. Search engines combined with mass voluntary and generally free submission of content to the Internet has increased the potential knowledge we have ready access to thousands-fold. Social networking allows us to easily reconnect with old friends we'd long lost contact with. Each of us has access to much larger libraries of music and written works. We have access to a vast "long tail" of specialized content that the traditional mass media never provided us. The barriers to a smart person with worthwhile thoughts getting fellow humans to attend to those thoughts are far lower than what they were twenty years ago. And almost none of this can be measured in market prices, so almost none of it shows up in the economic figures on which economists focus.

Cowen suggests that external gains of similar magnitude occurred in prior productivity revolutions, but I'm skeptical of this claim. A physical widget can be far more completely monetized than a piece of information, because it is excludable: if you don't pay, you don't get the widget. Information, by contrast, is readily copied by computers. (The most underappreciated function of computers is that they are far better copy machines than paper copiers.) It's true that in past revolutions competition drove down the prices of widgets. But the result was still largely monetized, as greater value caused increased demand, whereas growth in the use of search engines, Twitter, Wikipedia, Facebook etc. largely just requires adding a few more computers that now cost far less than the value they convey. (Yes, I'm well aware of scaling issues in software engineering, but they typically don't require much more than a handful of smart computer scientists to solve.) Due to Moore's Law the computers that drive the Internet have radically increased in functionality per dollar since the dawn of the Internet. Twitter's total capital equipment purchases, R&D, and user acquisition expenditures are less than fifty cents per registered user, and these capital investment costs per user continue to drop at a ferocious rate for Internet businesses and non-profits.

The brain drain from traditional industries can be seen in, for example, the great increase in the proportion of bookstore shelf space given to books on computer programming, HTML, and the like, relative to traditional engineering and technical disciplines from mechanical engineering to plumbing. It is less blatant in the growth of computer science and electrical engineering relative to other engineering disciplines, but that's just the tip of the iceberg: vast numbers of non-computer scientists, including many with engineering degrees or technical training in other areas, have ended up as computer programmers.

Fortunately, the Internet is giving a vast new generation of smart people access to knowledge who never had it before. The number of smart people who can learn an engineering discipline has probably increased by nearly a factor of ten over the last twenty years (again largely in the BRICs and developing world, of course). The number who can actually get a degree, of course, has not, which gives rise to a great economic challenge: what are good ways for this vast new population of educated smart people to prove their intelligence and knowledge when traditional education, with its degrees of varying prestige, is essentially a zero-sum status game that excludes them? How do we get them in regular social contact with more traditionally credentialed smart people? The Internet may solve much of the problem of finding fellow smart people who share our interests and skills, but we still emotionally bond with people over dinner, not over Facebook.

As for the great stagnation in real wages in particular, the biggest reason is probably the extraordinarily rapid pace at which the BRICs and developing world have become educated and accessible to the developed world since the Cold War. In other words, outsourcing has in a temporary post-Cold-War spree outraced the ability of most of us in the developed world to retrain to the more advanced industries. The most unappreciated reason, and the biggest reason retraining for newer industries has been so difficult, is that unmonetized value provides no paying jobs, but may destroy such jobs when it causes the decline of some traditionally monetized industries. On the Internet the developed world is providing vast value to the BRICs and developing world, but that value is largely unmonetized and thus produces relatively few jobs in the developed world. The focus of the developed world on largely unmonetized, though extremely valuable, activities has been a significant cause of wage stagnation in the developed world and of skill and thus wage increases in the developing world. Whereas before they were buying our movies, music, books, and news services, increasingly they are just getting our free stuff on the Internet. The most important new industry of the last twenty years has been mostly unmonetized and thus hasn't provided very many jobs to retrain for, relative to the value it has produced.

And of course there are the challenges of the traditional industries that gave us the industrial revolution and 20th century economic growth in the first place. Starting with the most basic and essential: agriculture, extraction, and mass manufacturing. By no means should these be taken for granted; they are the edifice on which all the remainder rests. Gains in agriculture and extraction may be diminishing as the easy pickings (given sufficiently industrial technology and a sufficiently elaborated division of labor) of providing scarce nutrients and killing pests in agriculture and the geologically concentrated ores are becoming history. Can the great knowledge gains from the Internet be fed back to improve the productivities of our most basic industries, especially in the face of Malthusian depletion of the low hanging fruit of soil productivity and geological wealth? That remains to be seen, but despite all the market troubles and run-up in commodity prices, which have far more to do with financial policies than with the real costs of extracting commodities, I remain optimistic. We still have very large and untapped physical frontiers. These tend to be, for the near future, below us rather than above us, which flies in the face of our spiritual yearnings (although for space fans here is the most promising possible exception to this rule I have encountered). The developing world may win these new physical frontiers due to the high political value the developed world places on environmental cleanliness, which has forced many dirty but crucial businesses overseas. Industries that involve far more complex things, like medicine and the future of the Internet itself, are far more difficult to predict. But the simple physical frontiers as well as the complex medical and social frontiers are all there, waiting for our new generations with their much larger number of much more knowledgeable people to tap them.

Wednesday, January 26, 2011

Some hard things are easy if explained well

Dark matter:

Chemical reactions, fire, and photosynthesis:

How trains stay on their tracks and go through turns:

And just for fun, the broken window fallacy:

Saturday, January 22, 2011

Tech roundup 01/22/11

Bitcoin, an implementation of the bit gold idea (and another example of where the order of events is important), continues to be popular.

It is increasingly being realized that there are many "squishy" areas where scientific methods don't work as well as they do in hard sciences like physics and chemistry, including psychology, significant portions of medicine, and ecology, to which I'd add the social sciences, climate, and nutrition. These areas are often hopelessly infected with subjective judgments about results, so it's not too surprising that when the collective judgments change about what constitutes, for example, the "health" of a mind, body, society, or ecosystem, the "results" of experiments as defined in terms of these judgments change as well. See also "The Trouble With Science".

Flat sats (as I like to call them) may help expand our mobility in the decades ahead. Keith Lofstrom proposes fabricating an entire portion of a phased array communications satellite -- solar cells, radios, electronics, computation, etc. -- on a single silicon wafer. Tens of thousands or more of these, each nearly a foot wide, may be launched on a single small rocket. If they're thin enough, orientation and orbit can be maintained using light pressure (like a solar sail). Medium-term application: phased array broadcast of TV or data allows much smaller ground antennas, perhaps even satellite TV and (mostly downlink) Internet in your phone, iPad, or laptop. Long-term: lack of need for structure to hold together an array of flat sats may bring down the cost of solar power in space to the point that we can put the power-hungry server farms of Internet companies like Google, Amazon, Facebook, etc. in orbit. Biggest potential problem: large numbers of these satellites may both create and be vulnerable to micrometeors and other space debris.
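Why "thin enough" matters for light-pressure station-keeping: for a uniform flat sheet facing the Sun, both the push and the mass scale with area, so the area cancels and the acceleration depends only on mass per unit area. The sketch assumes an idealized bare silicon wafer at about 1 AU (solar flux about 1361 W/m², silicon density about 2330 kg/m³) and ignores the mass of electronics, partial absorption by solar cells, and tilt; the thicknesses are illustrative, not figures from Lofstrom's design.

```python
SOLAR_FLUX_W_M2 = 1361.0          # sunlight intensity near Earth (about 1 AU)
SPEED_OF_LIGHT_M_S = 299_792_458.0
SILICON_DENSITY_KG_M3 = 2330.0


def radiation_pressure(reflectivity: float) -> float:
    """Pressure in N/m^2 on a flat sheet facing the Sun.

    reflectivity = 0 models a perfect absorber, 1 a perfect mirror (twice the push).
    """
    return (1.0 + reflectivity) * SOLAR_FLUX_W_M2 / SPEED_OF_LIGHT_M_S


def light_acceleration(thickness_m: float, reflectivity: float = 1.0,
                       density: float = SILICON_DENSITY_KG_M3) -> float:
    """Acceleration in m/s^2 of a uniform wafer; its area cancels out."""
    mass_per_area = density * thickness_m   # kg per m^2
    return radiation_pressure(reflectivity) / mass_per_area


for microns in (700, 100, 25):    # illustrative thicknesses only
    a = light_acceleration(microns * 1e-6)
    print(f"{microns:>4} um wafer: {a:.2e} m/s^2")
```

Halving a wafer's thickness doubles its light-pressure acceleration, which is the whole case for making flat sats as thin as the electronics allow.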

Introduction to genetic programming, a powerful evolutionary machine learning technique that can invent new electronic circuits, rediscover Kepler's laws from orbital data in seconds, and much more, as long as it has fairly complete and efficient simulations of the environment it is inventing or discovering in.
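To make the idea concrete, here is a toy, hand-rolled genetic programming loop, not Koza's system or any particular library. Candidate formulas are expression trees over the semi-major axis `a`. Mutation replaces random subtrees, and an elitist loop keeps the best tree while refilling the population with mutants of the fittest fifth. The function set, population size, seed, and the approximate planetary figures (AU, years) are all assumptions of this sketch. The "environment" here is just a small table of orbital data, which is exactly the kind of complete, cheap simulation the technique depends on. A given run is not guaranteed to find Kepler's `a * sqrt(a)`.

```python
import math
import random

# Approximate semi-major axis (AU) and orbital period (years) for six planets.
PLANETS = [(0.387, 0.241), (0.723, 0.615), (1.000, 1.000),
           (1.524, 1.881), (5.203, 11.862), (9.537, 29.457)]

FUNCS = {"add": 2, "mul": 2, "div": 2, "sqrt": 1}  # function name -> arity
TERMINALS = ["a", 1.0]

def random_tree(rng, depth):
    """Grow a random expression tree no deeper than `depth`."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    name = rng.choice(list(FUNCS))
    return (name,) + tuple(random_tree(rng, depth - 1) for _ in range(FUNCS[name]))

def evaluate(tree, a):
    """Evaluate a tree at semi-major axis a, with protected division and sqrt."""
    if tree == "a":
        return a
    if isinstance(tree, float):
        return tree
    name, *args = tree
    vals = [evaluate(t, a) for t in args]
    if name == "add":
        return vals[0] + vals[1]
    if name == "mul":
        return vals[0] * vals[1]
    if name == "div":
        return vals[0] / vals[1] if abs(vals[1]) > 1e-9 else 1.0
    return math.sqrt(abs(vals[0]))  # "sqrt"

def fitness(tree):
    """Mean squared error of predicted periods; lower is better."""
    err = 0.0
    for a, period in PLANETS:
        d = evaluate(tree, a) - period
        err += d * d
    err /= len(PLANETS)
    return err if math.isfinite(err) else float("inf")

def mutate(rng, tree, depth=2):
    """Replace a randomly chosen subtree with a freshly grown one."""
    if not isinstance(tree, tuple) or rng.random() < 0.3:
        return random_tree(rng, depth)
    name, *args = tree
    i = rng.randrange(len(args))
    args[i] = mutate(rng, args[i], depth)
    return (name, *args)

def evolve(generations=30, pop_size=100, seed=1):
    """Elitist loop: keep the best tree, refill with mutants of the top fifth."""
    rng = random.Random(seed)
    pop = [random_tree(rng, 4) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness)
        history.append(fitness(pop[0]))
        parents = pop[: pop_size // 5]  # truncation selection
        pop = parents[:1] + [mutate(rng, rng.choice(parents))
                             for _ in range(pop_size - 1)]
    pop.sort(key=fitness)
    return pop[0], history

best, history = evolve()
print(best, history[-1])
```

Because the best tree is always carried over unchanged, the best error never gets worse from one generation to the next. Whether it reaches the exact law depends on the run.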

Exploration for underwater gold mining is underway. See also "Mining the Vast Deep."

Monday, January 17, 2011

"The Singularity"

One of the basic ideas behind this movement is by no means off the wall. That idea is that computers can help make themselves smarter, and that this may produce growth that for a time looks exponential, or even super-exponential in some dimensions, and that may end up much faster than today's. Indeed, computers have been helping to improve future versions of themselves at least since the first compilers and circuit design software were invented. But "the Singularity" itself is an incoherent and, as the capitalization suggests, basically religious idea. It is also a nifty concept for marketing AI research to investors who like very high-risk, high-reward bets.

The "for a time" bit is crucial. There is, as Feynman said, "plenty of room at the bottom," but it is by no means infinite given actually demonstrated physics. Under physics as we know it, information and data processing are limited by the number of particles we have access to, and that number can in the long run grow at most as a cubic polynomial in time (and probably much more slowly, since space is mostly empty). So unless we discover physics that we do not now know, all growth curves that look exponential or faster in the short run must turn over and become S-curves or similar in the long run.
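The cubic bound can be written out as a back-of-the-envelope sketch. It rests on two assumptions: that we gather resources from a sphere expanding no faster than the speed of light $c$ (ignoring cosmology), and that accessible matter has some average particle density $\rho$. Let $N(t)$ be the number of particles reachable after time $t$. Any exponential $N_0 e^{rt}$ with $r > 0$ then eventually outruns it:

```latex
N(t) \le \rho \cdot \tfrac{4}{3}\pi (c\,t)^3 = O(t^3),
\qquad
\lim_{t \to \infty} \frac{N_0\, e^{rt}}{\rho \cdot \tfrac{4}{3}\pi (c\,t)^3} = \infty \quad (r > 0).
```

Past the crossover point the exponential would need more particles than exist within reach, so the curve has to bend over.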

Rodney Brooks thus calls the Singularity "a period" rather than a single point in time, but if so then why call it a singularity?

As for "the Singularity" as a point past which we cannot predict: by this definition the stock market is an ongoing, rolling singularity, as are most aspects of the weather, many quantum events, and many other aspects of our world and society. And futurists are notoriously bad at predicting the future anyway, so just what is supposed to be novel about an unpredictable future?

The Singularitarian notion of an all-encompassing or "general" intelligence flies in the face of how our modern economy, with its extreme specialization, works. We have been implementing human intelligence in computers in little bits and pieces, and this has been going on for centuries. Arithmetic (first with mechanical calculators), then bitwise Boolean logic (from the early part of the 20th century, with vacuum tubes), then accounting formulae and linear algebra (big mainframes of the 1950s and 60s), then typesetting (Xerox PARC, Apple, Adobe, etc.), and so on, have each gone through their own periods of exponential and even super-exponential growth. But it is these particular operations, not intelligence in general, that exhibit such growth.

At the start of the 20th century, doing arithmetic in one's head was one of the main signs of intelligence. Today machines do quadrillions of additions and subtractions for each one done in a human brain, and this rarely bothers or even occurs to us. And the same extreme division of labor that gives us modern technology also means that AI has taken, and will continue to take, the form of these hyper-idiot, hyper-savant, hyper-specialized machine capabilities. Even if there were such a thing as a "general intelligence," the specialized machines would soundly beat it in the marketplace. It would be very far from a close contest.

Another way to look at the limits of this hypothetical general AI is to look at the limits of machine learning. I've worked extensively with evolutionary algorithms and other machine learning techniques. These are very promising but are also extremely limited without accurate and complete simulations of an environment in which to learn. So for example in evolutionary techniques the "fitness function" involves, critically, a simulation of electric circuits (if evolving electric circuits), of some mechanical physics (if evolving simple mechanical devices or discovering mechanical laws), and so on.
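A minimal sketch shows how a fitness function leans entirely on a simulator. Here a candidate circuit is scored by simulating it and comparing its behavior against a desired response. The "simulator" is a deliberately crude forward-Euler model of an RC low-pass filter's step response, standing in for the far more detailed circuit simulation a real system would use. The target values, time step, and function names are hypothetical. The last lines compare the simulator with the exact solution to make the point that the simulator, and therefore the fitness score, is itself only an approximation of the real circuit.

```python
import math

def simulate_rc_step(r_ohms, c_farads, v_in=1.0, dt=1e-5, steps=500):
    """Forward-Euler simulation of an RC filter's capacitor voltage after a step input."""
    v = 0.0
    trace = []
    for _ in range(steps):
        v += dt * (v_in - v) / (r_ohms * c_farads)  # dV/dt = (Vin - V) / RC
        trace.append(v)
    return trace

def circuit_fitness(candidate, target_trace):
    """Lower is better: squared error between simulated and desired responses."""
    r, c = candidate
    sim = simulate_rc_step(r, c)
    return sum((s - t) * (s - t) for s, t in zip(sim, target_trace))

# Hypothetical design goal: respond like a 1 kOhm / 1 uF filter (time constant 1 ms).
target = simulate_rc_step(1e3, 1e-6)
print(circuit_fitness((1e3, 1e-6), target))      # 0.0
print(circuit_fitness((2e3, 1e-6), target) > 0)  # True

# The simulator only approximates the exact solution 1 - exp(-t/RC) at t = 5 ms.
analytic = 1 - math.exp(-500 * 1e-5 / (1e3 * 1e-6))
print(abs(target[-1] - analytic))  # small but nonzero
```

An evolutionary search driven by this score can only be as faithful to real circuits as `simulate_rc_step` is. A candidate whose time constant is much smaller than `dt` would even make this simple Euler model go unstable.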

These techniques can learn things about the real world only to the extent that such simulations accurately simulate the real world. Except in extremely simple situations (e.g. rediscovering the formulae for Kepler's laws from orbital data, which a modern computer with the appropriate learning algorithm can now do in seconds), the simulations are usually woefully incomplete, which usually renders the results useless. For example, John Koza, after about 20 years of working on genetic programming, has discovered about that many useful inventions with it, largely involving easily simulable aspects of electronic circuits. And "meta GP", genetic programming that is supposed to evolve its own GP-implementing code, is useless because we can't simulate future runs of GP without actually running them. So these evolutionary techniques, and other machine learning techniques, are often interesting and useful, but the severely limited ability of computers to simulate most real-world phenomena means that no runaway is in store. Instead we can expect incremental improvements, much greater in simulable arenas and much smaller in others, that will come slowly as the accuracy and completeness of our simulations slowly improve.

The other problem with tapping into computer intelligence -- and after a century of computers there is indeed quite a bit of very useful but very alien intelligence there to tap into -- is getting information from human minds to computers and vice versa. Despite all the sensory inputs we can attach to computers these days, and the vast stores of human knowledge like Wikipedia that we can feed them, almost all such data is nearly meaningless to a computer. Think Helen Keller, but with most of her sense of touch removed on top of all her other tragedies. Similarly, humans have an extremely tough time deciphering the output of most software unless it is heavily massaged. We humans have huge informational bottlenecks between each other, but these hardly compare to the bottlenecks between ourselves and the hyperidiot/hypersavant aliens in our midst, our computers. As a result the vast majority of programmer time is spent working on user interfaces and munging data rather than on the internal workings of programs.

Nor does the human mind, flexible as it is, exhibit much in the way of a universal general intelligence. Many machines and many other creatures are capable of sensory, information-processing, and output feats that the human mind is quite incapable of. So even if we in some sense had a full understanding of the human mind (and it is information-theoretically impossible for one human mind to fully understand even one other human mind), or could somehow faithfully "upload" a human mind to a computer (another entirely conjectural operation, which may require infeasible simulations of chemistry), we would still not have "general" intelligence, again if such a thing even exists.

That's not to say that many of the wide variety of techniques that go under the rubric "AI" are not, or will not be, highly useful; they may even lead to accelerated economic growth as computers help make themselves smarter. But these will turn into S-curves as they approach physical limits, and the idea that this growth, or these many and varied intelligences, are in any nontrivial way "singular" is very wrong.

Sunday, January 16, 2011

Making a toaster the hard way

(h/t Andrew Chamberlain)

For more on the economics at work here, see my essay Polynesians vs. Adam Smith.